New York University CS-UY MISC Dibner 03/09/2019

Document Content and Description Below

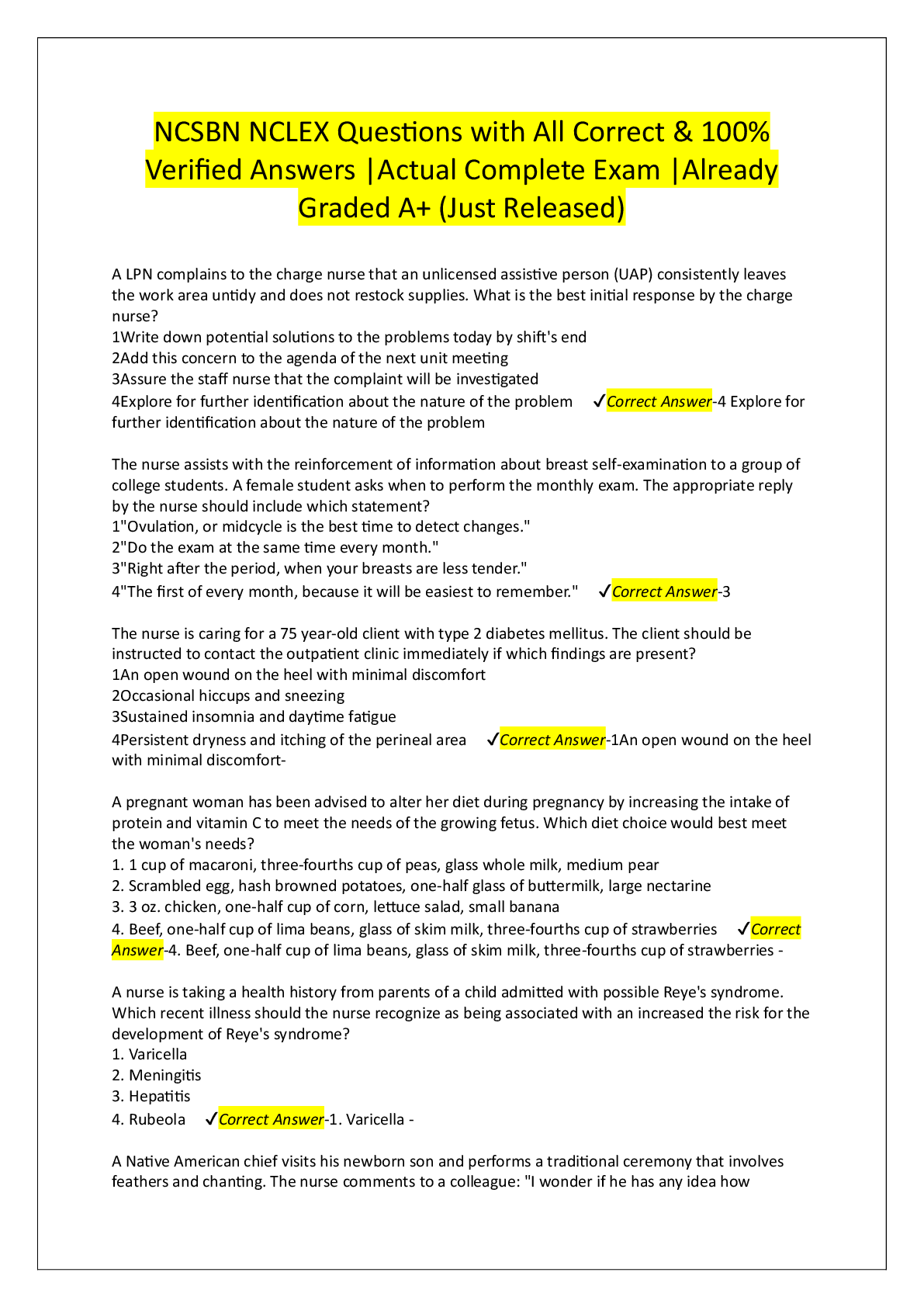

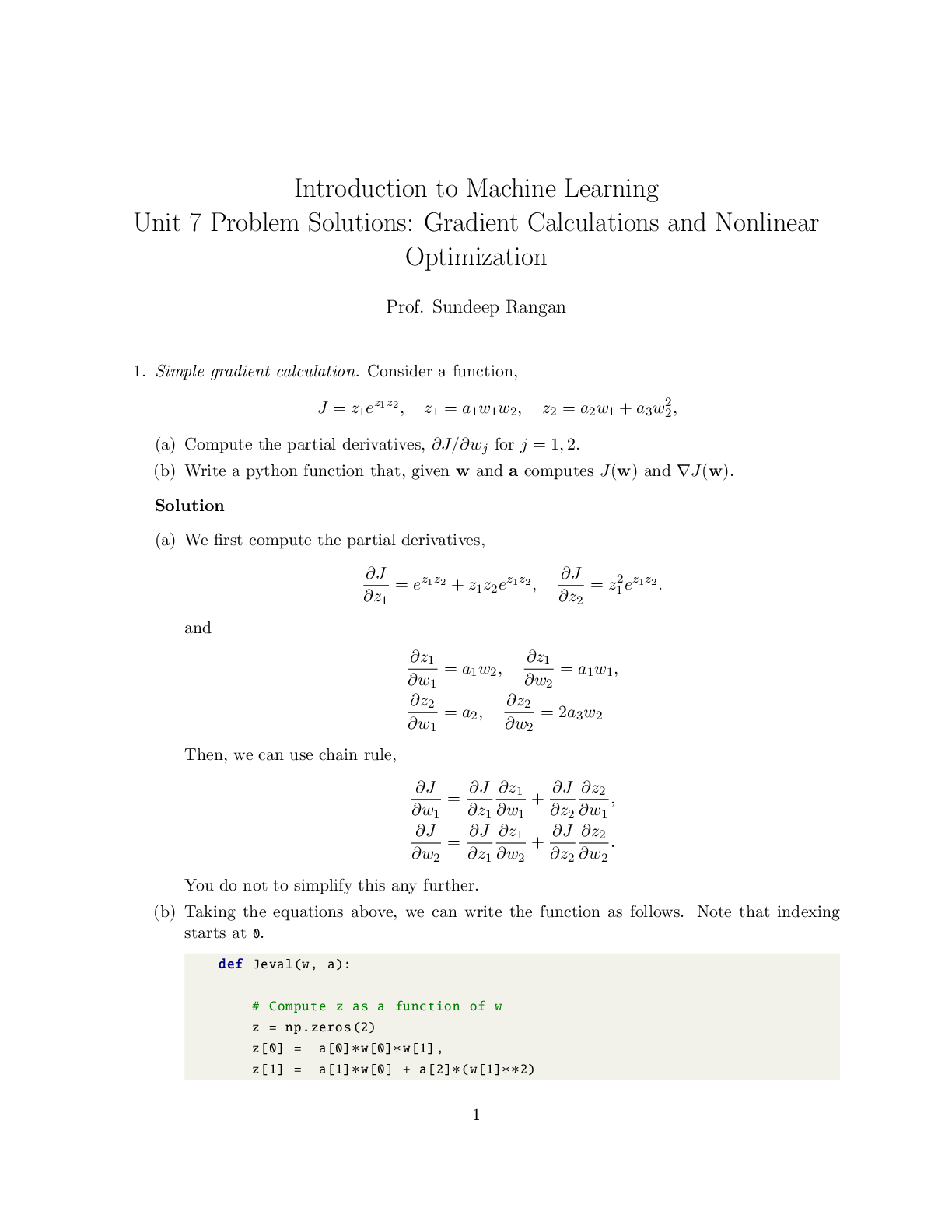

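Introduction to Machine Learning

Unit 7 Problem Solutions: Gradient Calculations and Nonlinear Optimization

Prof. Sundeep Rangan

1. Simple gradient calculation. Consider the function

$$J = z_1 e^{z_1 z_2}, \quad z_1 = a_1 w_1 w_2, \quad z_2 = a_2 w_1 + a_3 w_2^2.$$

(a) Compute the partial derivatives $\partial J/\partial w_j$ for $j = 1, 2$.

(b) Write a Python function that, given $w$ and $a$, computes $J(w)$ and $\nabla J(w)$.

Solution

(a) We first compute the partial derivatives

$$\frac{\partial J}{\partial z_1} = e^{z_1 z_2} + z_1 z_2 e^{z_1 z_2}, \quad \frac{\partial J}{\partial z_2} = z_1^2 e^{z_1 z_2},$$

and

$$\frac{\partial z_1}{\partial w_1} = a_1 w_2, \quad \frac{\partial z_1}{\partial w_2} = a_1 w_1, \quad \frac{\partial z_2}{\partial w_1} = a_2, \quad \frac{\partial z_2}{\partial w_2} = 2 a_3 w_2.$$

Then, by the chain rule,

$$\frac{\partial J}{\partial w_1} = \frac{\partial J}{\partial z_1}\frac{\partial z_1}{\partial w_1} + \frac{\partial J}{\partial z_2}\frac{\partial z_2}{\partial w_1}, \quad \frac{\partial J}{\partial w_2} = \frac{\partial J}{\partial z_1}\frac{\partial z_1}{\partial w_2} + \frac{\partial J}{\partial z_2}\frac{\partial z_2}{\partial w_2}.$$

You do not need to simplify this any further.

(b) Using the equations above, we can write the function as follows. Note that Python indexing starts at 0, so `w[0]` corresponds to $w_1$, `a[0]` to $a_1$, and so on.

```python
import numpy as np

def Jeval(w, a):
    # Compute z as a function of w
    z = np.zeros(2)
    z[0] = a[0]*w[0]*w[1]
    z[1] = a[1]*w[0] + a[2]*(w[1]**2)
    # ... (remainder of the original solution is truncated)
```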
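The solution text stops before the function computes $J$ and its gradient. As a sketch of how part (b) could be finished, the code below applies the chain-rule expressions from part (a) directly. It is not the original author's solution: the name `jeval_sketch`, the helper `numerical_grad` (a central-difference gradient check), and the test values for `w` and `a` are all illustrative assumptions.

```python
import numpy as np


def jeval_sketch(w, a):
    """Hypothetical helper: return J(w) and grad J(w) for
    J = z1*exp(z1*z2), z1 = a1*w1*w2, z2 = a2*w1 + a3*w2**2
    (0-based indices in code: w[0] is w1, a[0] is a1, etc.)."""
    # Forward pass: intermediate variables
    z1 = a[0] * w[0] * w[1]
    z2 = a[1] * w[0] + a[2] * w[1] ** 2
    e = np.exp(z1 * z2)
    J = z1 * e

    # Partial derivatives of J with respect to z1 and z2
    dJ_dz1 = e + z1 * z2 * e
    dJ_dz2 = z1 ** 2 * e

    # Partial derivatives of z1 and z2 with respect to (w1, w2)
    dz1_dw = np.array([a[0] * w[1], a[0] * w[0]])
    dz2_dw = np.array([a[1], 2 * a[2] * w[1]])

    # Chain rule: dJ/dw_j = dJ/dz1 * dz1/dw_j + dJ/dz2 * dz2/dw_j
    grad = dJ_dz1 * dz1_dw + dJ_dz2 * dz2_dw
    return J, grad


def numerical_grad(f, w, eps=1e-6):
    """Hypothetical helper: central-difference estimate of the gradient of scalar f at w."""
    w = np.asarray(w, dtype=float)
    g = np.zeros_like(w)
    for j in range(w.size):
        step = np.zeros_like(w)
        step[j] = eps
        g[j] = (f(w + step) - f(w - step)) / (2 * eps)
    return g


# Example: compare the analytic gradient with a finite-difference estimate
a = np.array([1.0, 0.5, -0.3])  # hypothetical coefficients
w = np.array([0.4, 0.7])        # hypothetical test point
J, grad = jeval_sketch(w, a)
grad_num = numerical_grad(lambda v: jeval_sketch(v, a)[0], w)
print(J, grad, grad_num)
```

If the analytic and finite-difference gradients agree to several digits, the chain-rule expressions are most likely implemented correctly; this kind of check is a common way to debug hand-derived gradients.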
