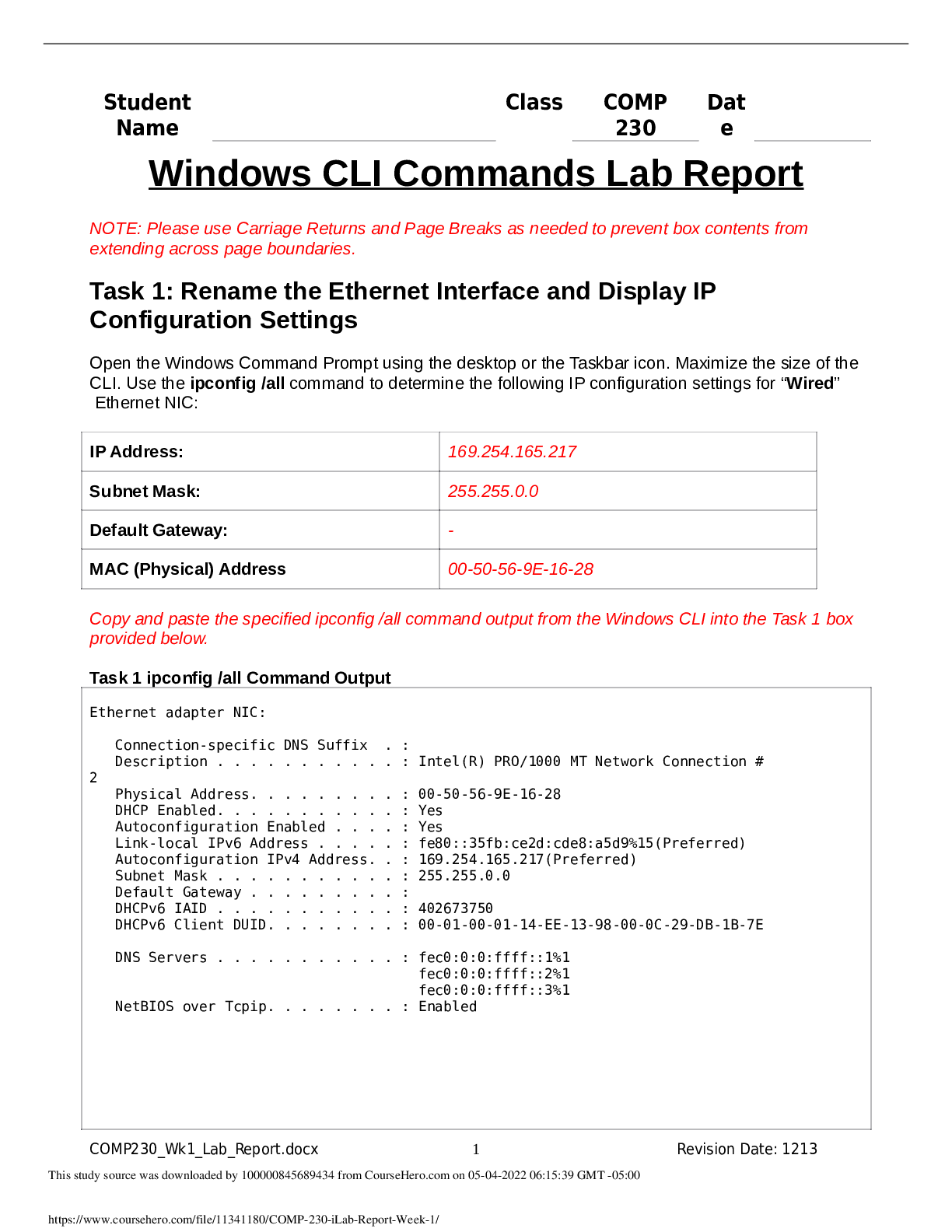

COMP 230 Week 1 Lab: Windows CLI Commands Lab Report (GRADED)

$8

PHARMACOLOGY PRE-ASSESSMENT QUIZ

$7

EMT Practice Test Questions with correctly solved solutions.

$16

GI/neuro quiz 4 practice questions Adult Health II (Chamberlain University)

$10

FDNY Probie School Week 1 Quiz Review | 40 Questions with 100% Correct Answers | Updated & Verified

$5

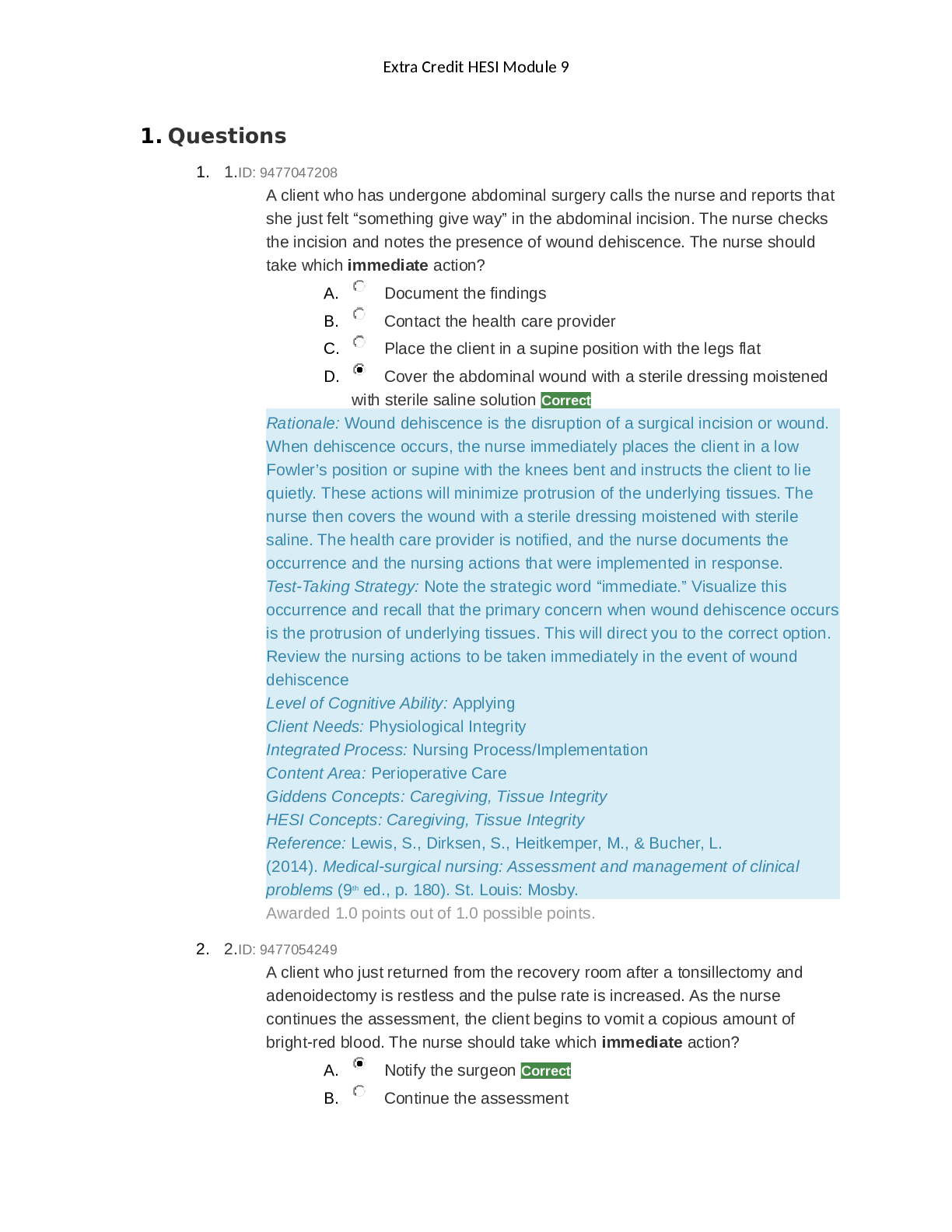

Extra Credit HESI Module 9 2022-2023

$14

WGU C224 Research Foundations Questions and Answers Already Passed

$15

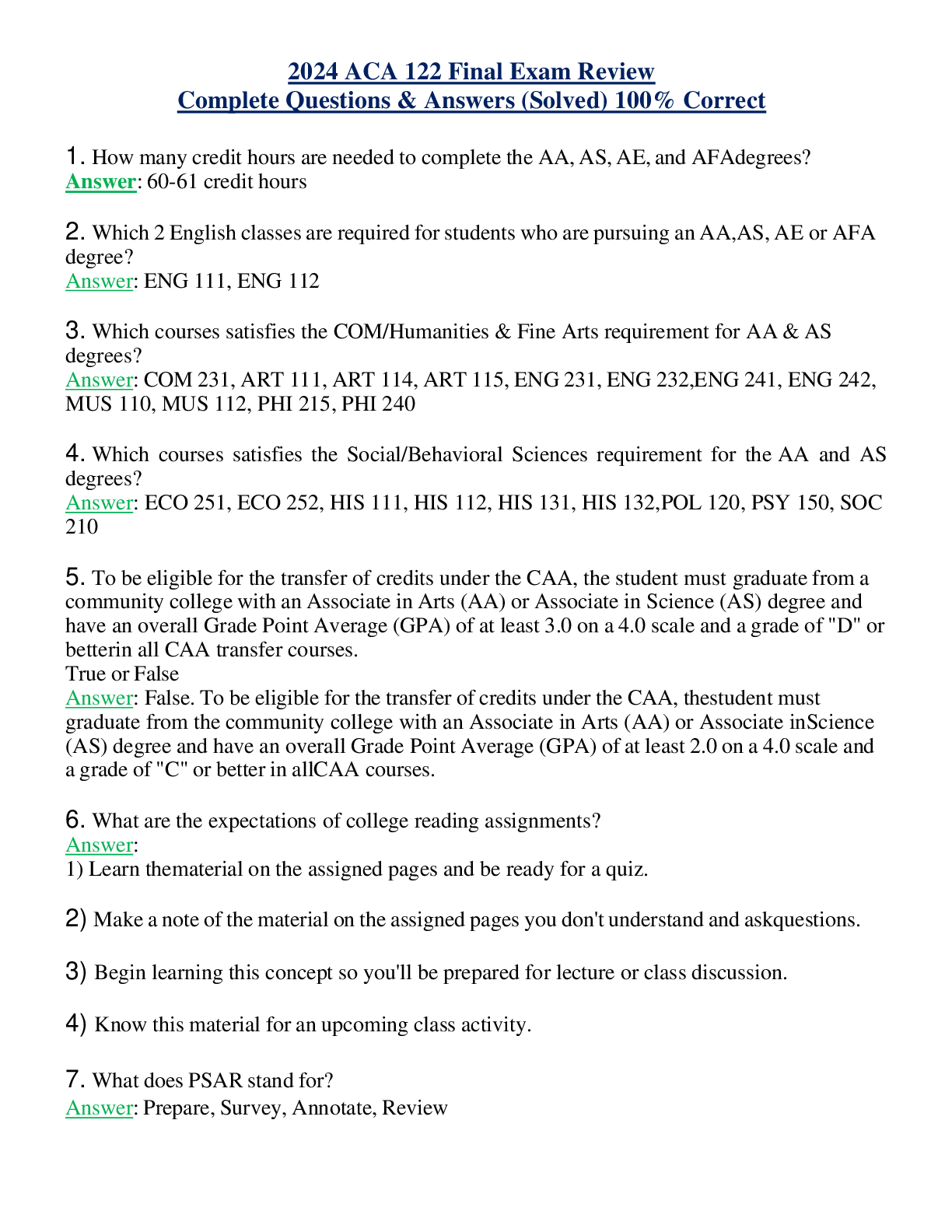

2024 ACA 122 Final Exam Review Complete Questions & Answers (Solved) 100% Correct

$9

COMP 230 Week 3 Course Project: Automating Administrative Tasks [GRADED A]

$12.50

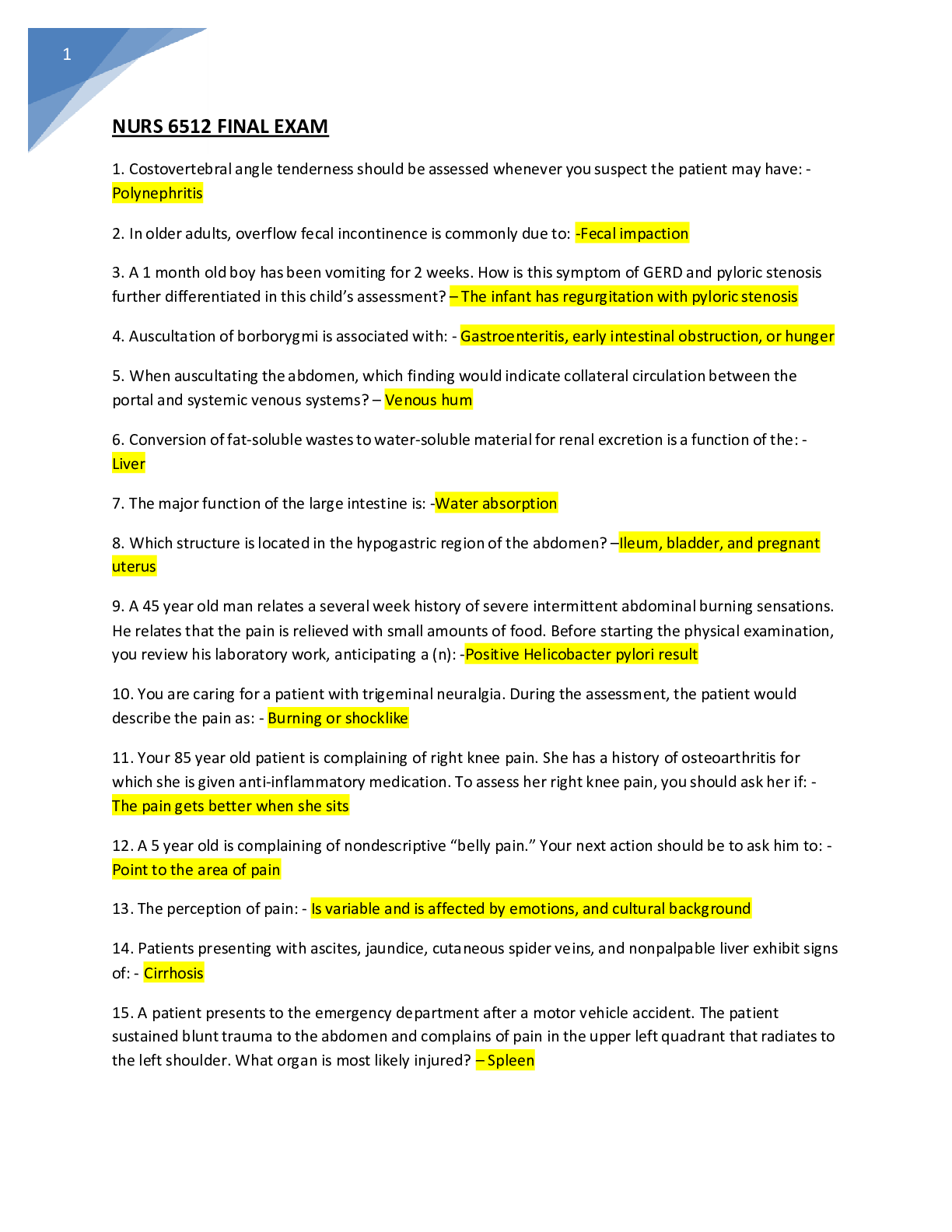

NURS 6512 FINAL EXAM

$16.50

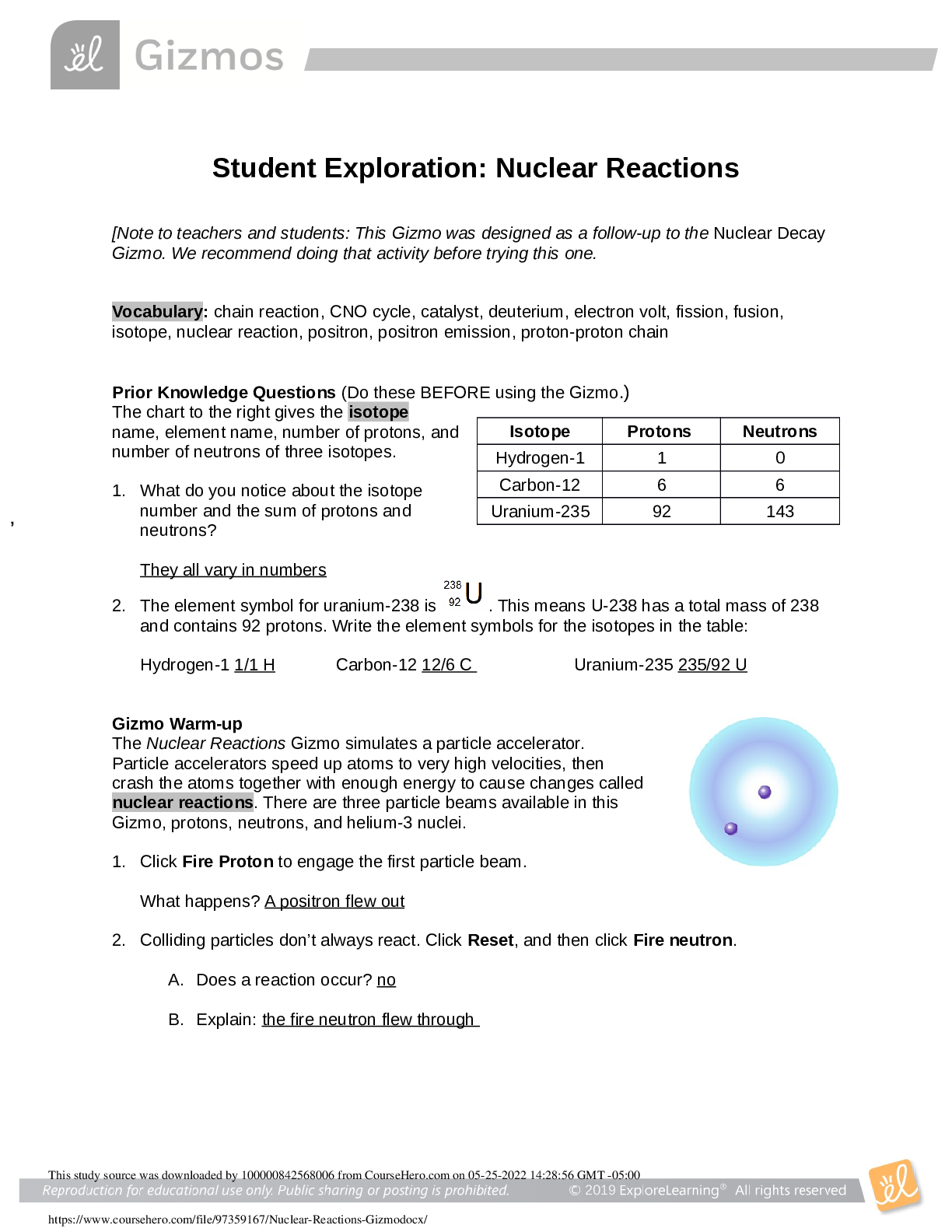

Student Exploration: Nuclear Reactions