Computer Science > QUESTIONS & ANSWERS > # Do not use packages that are not in standard distribution of python import numpy as np from ._base (All)

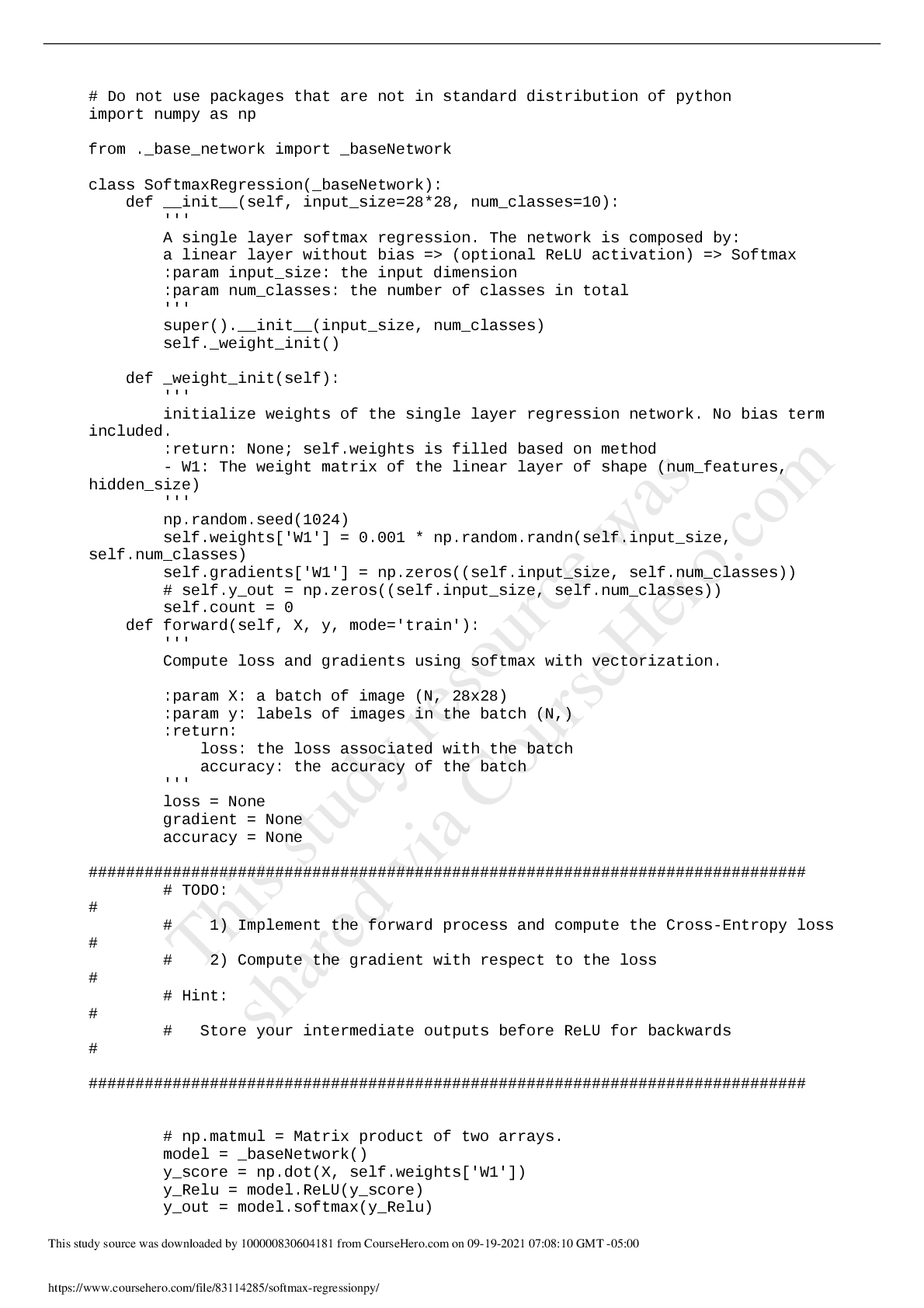

# Do not use packages that are not in standard distribution of python import numpy as np from ._base_network import _baseNetwork class SoftmaxRegression(_baseNetwork): def __init__(self, input_size=28*28, num_classes=10):

Document Content and Description Below

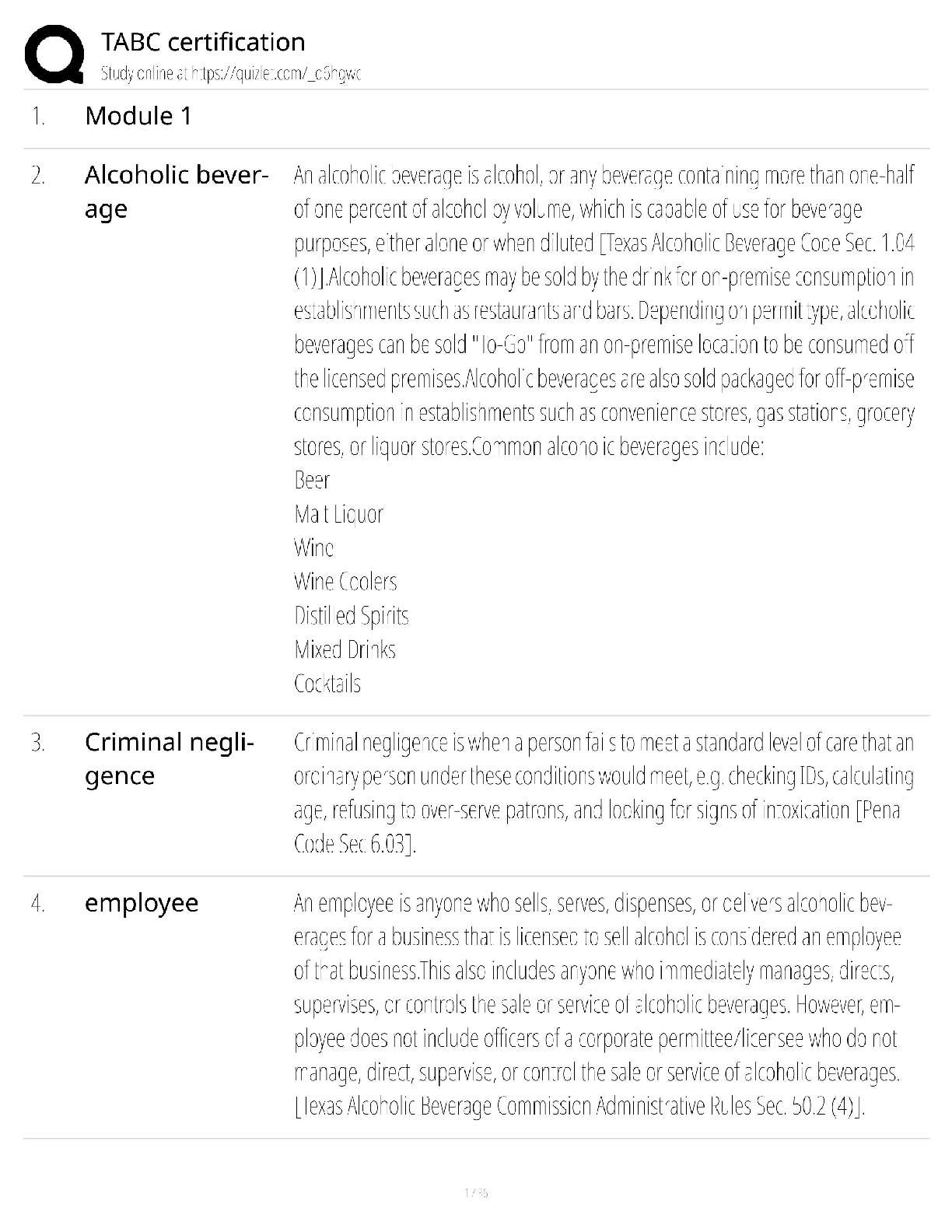

    # Do not use packages that are not in standard distribution of python
    import numpy as np

    from ._base_network import _baseNetwork


    class SoftmaxRegression(_baseNetwork):
        def __init__(self, input_size=28*28, num_classes=10):
            '''
            A single layer softmax regression. The network is composed of:
            a linear layer without bias => (optional ReLU activation) => Softmax
            :param input_size: the input dimension
            :param num_classes: the number of classes in total
            '''
            super().__init__(input_size, num_classes)
            self._weight_init()

        def _weight_init(self):
            '''
            Initialize weights of the single layer regression network. No bias term included.
            :return: None; self.weights is filled based on method
            - W1: The weight matrix of the linear layer of shape (input_size, num_classes)
            '''
            np.random.seed(1024)
            self.weights['W1'] = 0.001 * np.random.randn(self.input_size, self.num_classes)
            self.gradients['W1'] = np.zeros((self.input_size, self.num_classes))
            # self.y_out = np.zeros((self.input_size, self.num_classes))
            self.count = 0

        def forward(self, X, y, mode='train'):
            '''
            Compute loss and gradients using softmax with vectorization.
            :param X: a batch of images (N, 28x28)
            :param y: labels of images in the batch (N,)
            :return:
                loss: the loss associated with the batch
                accuracy: the accuracy of the batch
            '''
            loss = None
            gradient = None
            accuracy = None
            #############################################################################
            # TODO:                                                                     #
            #    1) Implement the forward process and compute the Cross-Entropy loss    #
            #    2) Compute the gradient with respect to the loss                       #
            # Hint:                                                                     #
            #   Store your intermediate outputs before ReLU for backwards               #
            #############################################################################
            # np.dot on two 2-D arrays is the matrix product (same as np.matmul).
            # ReLU and softmax are inherited from _baseNetwork, so call them on self
            # rather than constructing a second _baseNetwork instance.
            y_score = np.dot(X, self.weights['W1'])
            y_Relu = self.ReLU(y_score)
            y_out = self.softmax(y_Relu)
            # ... (preview truncated here)
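The preview stops before the loss and gradient are computed, and the `ReLU` and `softmax` helpers of `_baseNetwork` are not shown. As a rough, standalone sketch of what the TODO asks for (linear layer without bias, ReLU, softmax, mean cross-entropy, and the gradient with respect to the weights), something like the following could work. The function names `softmax` and `forward_and_gradient`, the `1e-12` log guard, and the toy data are assumptions made for illustration; they are not taken from the course code.

```python
import numpy as np


def softmax(scores):
    """Row-wise softmax; subtracting the row max avoids overflow in exp."""
    shifted = scores - scores.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def forward_and_gradient(X, y, W):
    """Linear (no bias) => ReLU => softmax, with mean cross-entropy loss.

    X: (N, D) inputs, y: (N,) integer labels, W: (D, C) weights.
    Returns (loss, accuracy, dW), where dW has the same shape as W.
    """
    n = X.shape[0]
    z = X @ W                   # pre-activation scores, kept for the backward pass
    a = np.maximum(z, 0.0)      # ReLU
    p = softmax(a)              # class probabilities, shape (N, C)

    # Mean negative log-likelihood of the true class (small constant guards log(0)).
    loss = -np.mean(np.log(p[np.arange(n), y] + 1e-12))
    accuracy = np.mean(p.argmax(axis=1) == y)

    # Softmax + cross-entropy: d(loss)/d(a) = (p - one_hot(y)) / N
    da = p.copy()
    da[np.arange(n), y] -= 1.0
    da /= n
    dz = da * (z > 0)           # ReLU passes gradient only where z > 0
    dW = X.T @ dz               # chain rule through the linear layer
    return loss, accuracy, dW


# Toy batch: 5 samples, 4 features, 3 classes (illustrative values only).
rng = np.random.default_rng(0)
X = rng.standard_normal((5, 4))
y = np.array([0, 2, 1, 2, 0])
W = 0.001 * rng.standard_normal((4, 3))
loss, accuracy, dW = forward_and_gradient(X, y, W)
```

With weights this small the predicted probabilities are nearly uniform, so the loss should sit close to `log(num_classes)`, which is a common sanity check before training. Inside the class, the same quantities would presumably be stored in `self.gradients['W1']` and returned as `loss` and `accuracy`.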

Last updated: 2 years ago

Preview 1 out of 3 pages

Document information

About the document

Uploaded on: Jan 13, 2023

Number of pages: 3

Written in: All
