Georgia Institute Of Technology Deep Learning CS7643_Quiz3_1

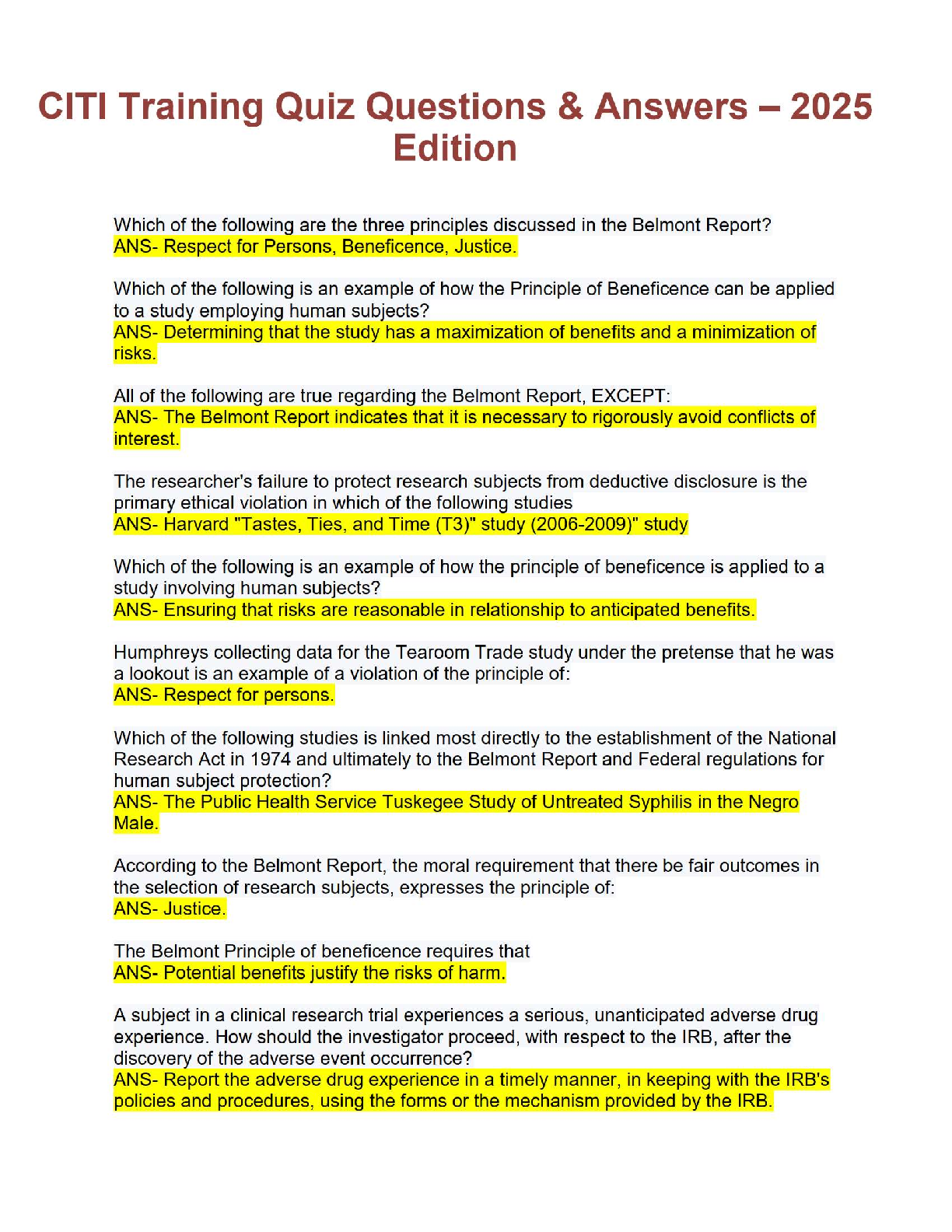

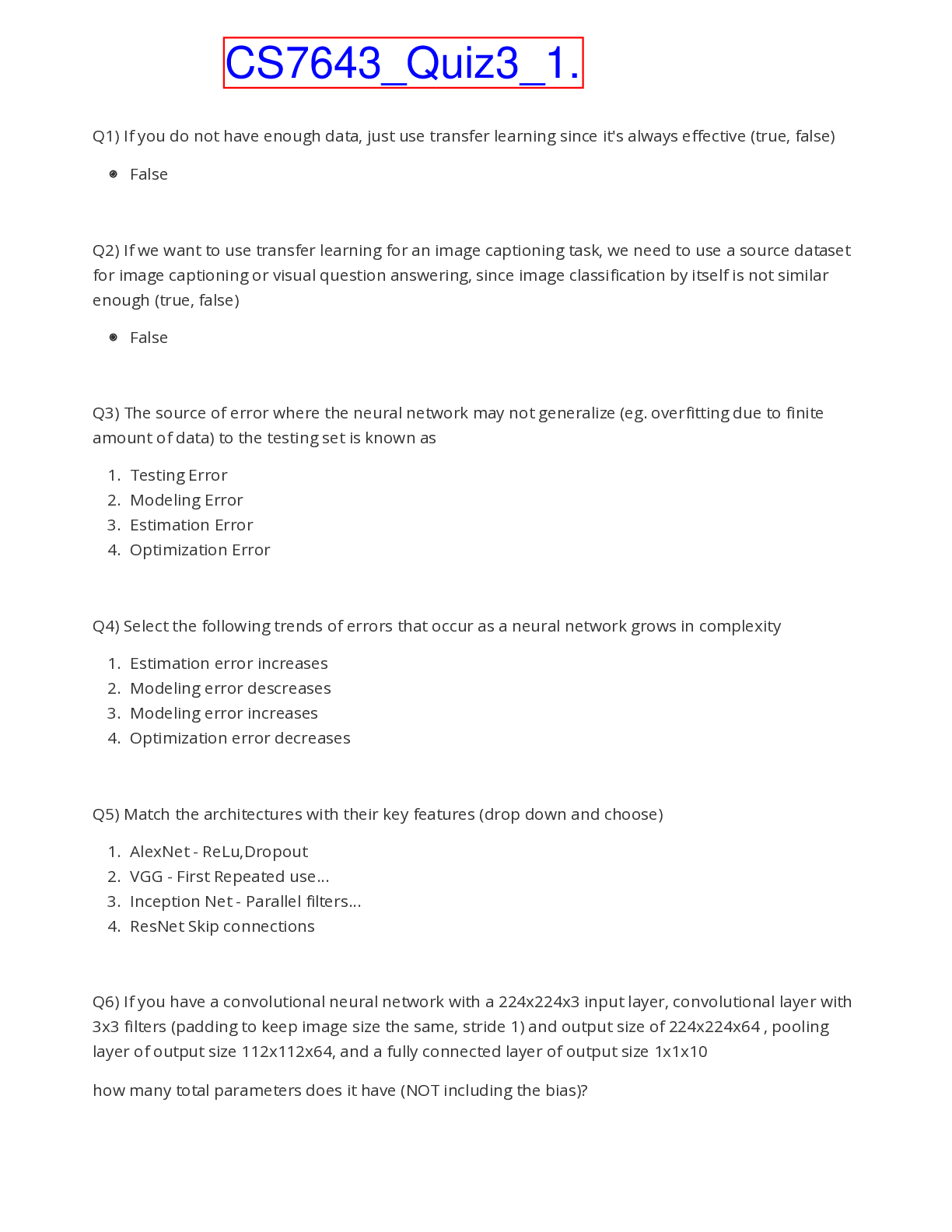

Q1) If you do not have enough data, just use transfer learning, since it's always effective. (true, false)

Q2) If we want to use transfer learning for an image captioning task, we need to use a source dataset for image captioning or visual question answering, since image classification by itself is not similar enough. (true, false)

Q3) The source of error where the neural network may not generalize to the testing set (e.g., overfitting due to a finite amount of data) is known as:
1. Testing Error
2. Modeling Error
3. Estimation Error
4. Optimization Error

Q4) Select the trends in error that occur as a neural network grows in complexity:
1. Estimation error increases
2. Modeling error decreases
3. Modeling error increases
4. Optimization error decreases

Q5) Match the architectures with their key features (drop down and choose):
1. AlexNet - ReLU, Dropout
2. VGG - First Repeated use...
3. Inception Net - Parallel filters...
4. ResNet - Skip connections

Q6) If you have a convolutional neural network with a 224x224x3 input layer, a convolutional layer with 3x3 filters (padded to keep the image size the same, stride 1) and an output size of 224x224x64, a pooling layer with an output size of 112x112x64, and a fully connected layer with an output size of 1x1x10, how many total parameters does it have (NOT including the bias)?

Q7) If you have a convolutional neural network with a 224x224x3 input layer, a convolutional layer with 3x3 filters (padded to keep the image size the same, stride 1) and an output size of 224x224x64, a pooling layer with an output size of 112x112x64, and a fully connected layer with an output size of 1x1x10, assuming a batch size of 1, how much memory (where memory is defined as the number of values) does it use for forward activations (include the image)?

Q8) (Computation) Given dH, the 2x2 gradient of the cost with respect to the convolutional layer H, and X, the 3x3 output activations of the previous layer, perform the backward pass and return dW22, the gradient of the cost with respect to the weights W. Note that we want the resulting value at [2,2] (indexing starts at 1) of the gradient dW. Assume the usual convolutional layer implementation (forward pass is cross-correlation).

Q9) (Computation) Given dH, the 2x2 gradient of the cost with respect to the convolutional layer H, and the 2x2 weights W, perform the backward pass and return dX22, the gradient of the cost with respect to X, the output activations from the previous layer. Note that we want the resulting value at [2,2] (indexing starts at 1) of the gradient dX. Assume the usual convolutional layer implementation (forward pass is cross-correlation).
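The arithmetic behind Q6 and Q7 can be sketched as follows. This is a minimal sketch under stated assumptions: only the convolutional and fully connected layers carry weights (pooling has none), the fully connected layer connects every one of the 112x112x64 pooled values to each of the 10 outputs, and forward-activation memory counts the input image plus each layer's output exactly once (no separate ReLU or gradient buffers). The helper names are illustrative and not from any library.

```python
def conv_params(k, c_in, c_out):
    """Weights of a k x k conv layer with c_in input and c_out output channels (no bias)."""
    return k * k * c_in * c_out


def fc_params(n_in, n_out):
    """Weights of a fully connected layer mapping n_in values to n_out values (no bias)."""
    return n_in * n_out


def volume(shape):
    """Number of values in an activation tensor of shape (H, W, C)."""
    h, w, c = shape
    return h * w * c


# Layer output shapes from Q6/Q7, as (H, W, C).
input_shape = (224, 224, 3)
conv_shape = (224, 224, 64)
pool_shape = (112, 112, 64)
fc_shape = (1, 1, 10)

# Q6: the conv layer has 3x3x3 weights per filter and 64 filters; pooling adds none;
# the FC layer connects all pooled values to all 10 outputs.
total_params = (conv_params(3, input_shape[2], conv_shape[2])
                + fc_params(volume(pool_shape), volume(fc_shape)))

# Q7 (batch size 1): input image plus every layer's forward output.
total_activations = sum(volume(s) for s in (input_shape, conv_shape, pool_shape, fc_shape))

print(total_params)       # 8029888
print(total_activations)  # 4164618
```

Under these assumptions the fully connected layer dominates the parameter count (8,028,160 of the 8,029,888 weights), while the 224x224x64 convolutional output dominates activation memory.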
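For Q8 and Q9, the backward pass of a layer whose forward pass is the valid cross-correlation H = X ⋆ W can be sketched in plain Python. The weight gradient dW is the valid cross-correlation of X with dH, and the input gradient dX is the full convolution of dH with W, implemented here by scattering each dH[i][j] * W[a][b] back onto position [i+a][j+b]. The quiz's actual dH, X, and W values are not in this text, so the inputs below are hypothetical, and the helper names are illustrative.

```python
def cross_correlate_valid(x, k):
    """Valid 2D cross-correlation: out[i][j] = sum_{a,b} x[i+a][j+b] * k[a][b]."""
    kh, kw = len(k), len(k[0])
    oh, ow = len(x) - kh + 1, len(x[0]) - kw + 1
    return [[sum(x[i + a][j + b] * k[a][b] for a in range(kh) for b in range(kw))
             for j in range(ow)] for i in range(oh)]


def conv_backward(dH, X, W):
    """Gradients of H = cross_correlate_valid(X, W), given upstream gradient dH.

    dW[a][b] = sum_{i,j} dH[i][j] * X[i+a][j+b]   (cross-correlate X with dH)
    dX[p][q] = sum_{i,j} dH[i][j] * W[p-i][q-j]   (full convolution of dH with W)
    """
    dW = cross_correlate_valid(X, dH)
    dX = [[0] * len(X[0]) for _ in range(len(X))]
    # Scatter each upstream gradient onto every input position it was computed from.
    for i in range(len(dH)):
        for j in range(len(dH[0])):
            for a in range(len(W)):
                for b in range(len(W[0])):
                    dX[i + a][j + b] += dH[i][j] * W[a][b]
    return dW, dX


# Hypothetical values (the quiz's actual matrices are not given here).
X = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
W = [[1, 0], [-1, 2]]
dH = [[1, 2], [3, 4]]

dW, dX = conv_backward(dH, X, W)
# The quiz uses 1-based indexing, so entry [2,2] is index [1][1] here.
print(dW[1][1])  # 77
print(dX[1][1])  # 4
```

With 1-based indices and these shapes (3x3 X, 2x2 W, 2x2 dH), the two requested entries reduce to dW22 = dH11·X22 + dH12·X23 + dH21·X32 + dH22·X33 and dX22 = dH11·W22 + dH12·W21 + dH21·W12 + dH22·W11, i.e. the center of dX pairs dH with the flipped kernel.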


Document information

Uploaded on: May 13, 2022

Number of pages: 4
