
COMPUTER S 123 F29AI – Artificial Intelligence and Intelligent Agents Lab 6 – MDPs Q&A


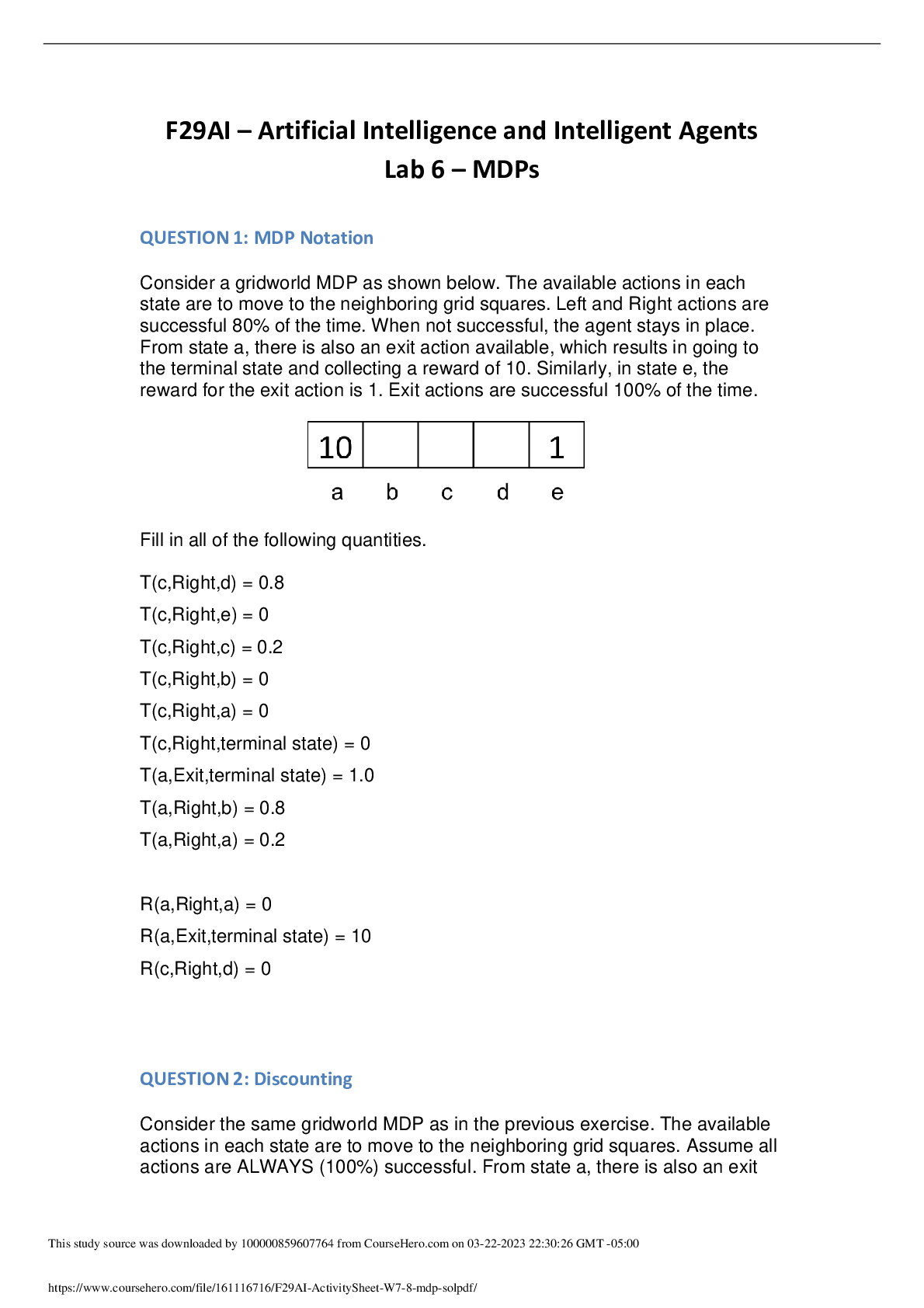

QUESTION 1: MDP Notation

Consider a gridworld MDP as shown below. The available actions in each state are to move to the neighboring grid squares. Left and Right actions are successful 80% of the time. When not successful, the agent stays in place. From state a, there is also an exit action available, which results in going to the terminal state and collecting a reward of 10. Similarly, in state e, the reward for the exit action is 1. Exit actions are successful 100% of the time. Fill in all of the following quantities.

QUESTION 2: Discounting

Consider the same gridworld MDP as in the previous exercise. The available actions in each state are to move to the neighboring grid squares. Assume all actions are ALWAYS (100%) successful. From state a, there is also an exit action available, which results in going to the terminal state and collecting a reward of 10. Similarly, in state e, the reward for the exit action is 1. Exit actions are also successful 100% of the time. Note that the agent is now in state d, as shown below.

Part 1

Let the discount factor γ=0.1. What is the optimal action in the current state (d)? (Hint: Write down the computation of the discounted reward)

• Left (0*1 + 0*0.1^1 + 0*0.1^2 + 10*0.1^3 = 0.01)
• Right (0*1 + 1*0.1^1 = 0.1)

Right is optimal here, since 0.1 > 0.01: with such a strong discount, the nearby reward of 1 is worth more than the distant reward of 10.

Part 2

Now let the discount factor γ=0.9999. What is the optimal action in the current state (d)? (Hint: Write down the computation of the discounted reward)

• Left (0*1 + 0*0.9999^1 + 0*0.9999^2 + 10*0.9999^3 ≈ 9.997)
• Right (0*1 + 1*0.9999^1 = 0.9999)

Left is optimal here, since 9.997 > 0.9999: with almost no discounting, the larger reward is worth the extra steps.

QUESTION 4: Solving MDPs

Consider the same gridworld MDP again, except that now Left and Right actions are 100% successful. Specifically, the available actions in each state are to move to the neighboring grid squares. From state a, there is also an exit action available, which results in going to the terminal state and collecting a reward of 10. Similarly, in state e, the reward for the exit action is 1. Exit actions are successful 100% of the time. Let the discount factor γ=1.
Fill in the following quantities.

Answer: The MDP is completely deterministic, and there is no discounting. Intuitively, this means that the optimal action from each of the states b, c, d and e is to move left towards the reward of 10, and the optimal action in a is to exit. Let’s see how this comes out in the numbers. Note that in all the calculations in the table below, we consider both the Left and the Right actions and pick the maximum. This is shown in bold. We don’t show the calculation for the suboptimal action here.

|    | a | b | c | d | e |
|----|---|---|---|---|---|
| V0 | 0 | 0 | 0 | 0 | 0 |

QUESTION 5:

Consider the following Markov Decision Process (MDP) of a robot race: The robot can either go fast or go slow. The states represent battery life remaining: fully charged (F), decreasing (D), or empty battery (E). Going fast brings double the reward of going slow, but also decreases battery life. This might eventually lead to an empty battery. Assume no discounting of future rewards (γ = 1.0) and a living reward of zero.

(i) Compute the time-limited values for 4 time steps using the Value Iteration algorithm, and fill in the table below.

(ii) What is the optimal policy in your own words?

Fill in the values below:

π*(F) = Fast
π*(D) = Slow

In plain language, the optimal policy is: when your battery is full, go fast. When it’s decreasing, go slow.
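The gridworld reasoning in Questions 2 and 4 can be checked numerically. The following is a minimal Python sketch, not part of the original lab sheet. It assumes the five states a to e form a single row (which matches the three-step path from d to a used in Question 2) and that all actions are deterministic. The names `STATES`, `EXIT_REWARD`, `exit_return`, `best_direction` and `value_iteration` are illustrative choices, not taken from the course material.

```python
# Assumption: states a..e lie in one row; Left/Right always succeed.
STATES = ["a", "b", "c", "d", "e"]
# Exit rewards as given in the lab text: 10 from a, 1 from e.
EXIT_REWARD = {"a": 10.0, "e": 1.0}


def exit_return(start, target, gamma):
    """Discounted return for walking from `start` to `target` and exiting.

    Moves earn reward 0, so only the exit reward counts, discounted by
    gamma once per move made before the exit.
    """
    steps = abs(STATES.index(start) - STATES.index(target))
    return EXIT_REWARD[target] * gamma ** steps


def best_direction(start, gamma):
    """Compare heading Left (exit at a) with heading Right (exit at e)."""
    left = exit_return(start, "a", gamma)
    right = exit_return(start, "e", gamma)
    return ("Left", left) if left > right else ("Right", right)


def value_iteration(gamma, sweeps):
    """Return [V0, V1, ..., V_sweeps] for the deterministic gridworld."""
    values = {s: 0.0 for s in STATES}
    history = [dict(values)]
    for _ in range(sweeps):
        new_values = {}
        for i, s in enumerate(STATES):
            candidates = []
            if i > 0:  # Left: reward 0, then the discounted neighbor value
                candidates.append(gamma * values[STATES[i - 1]])
            if i < len(STATES) - 1:  # Right: same, for the right neighbor
                candidates.append(gamma * values[STATES[i + 1]])
            if s in EXIT_REWARD:  # Exit: reward now, terminal value is 0
                candidates.append(EXIT_REWARD[s])
            new_values[s] = max(candidates)
        values = new_values
        history.append(dict(values))
    return history


if __name__ == "__main__":
    print(best_direction("d", 0.1))
    print(best_direction("d", 0.9999))
    for k, v in enumerate(value_iteration(1.0, 5)):
        print(f"V{k}", v)
```

Under these assumptions, `best_direction("d", 0.1)` picks Right and `best_direction("d", 0.9999)` picks Left, and with γ=1 every state's value reaches 10 after five sweeps, in line with the intuition that the agent should always head left and exit from a.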

Uploaded on: Mar 23, 2023
