
CS 189 Introduction to Machine Learning Q&A | University of California, Berkeley


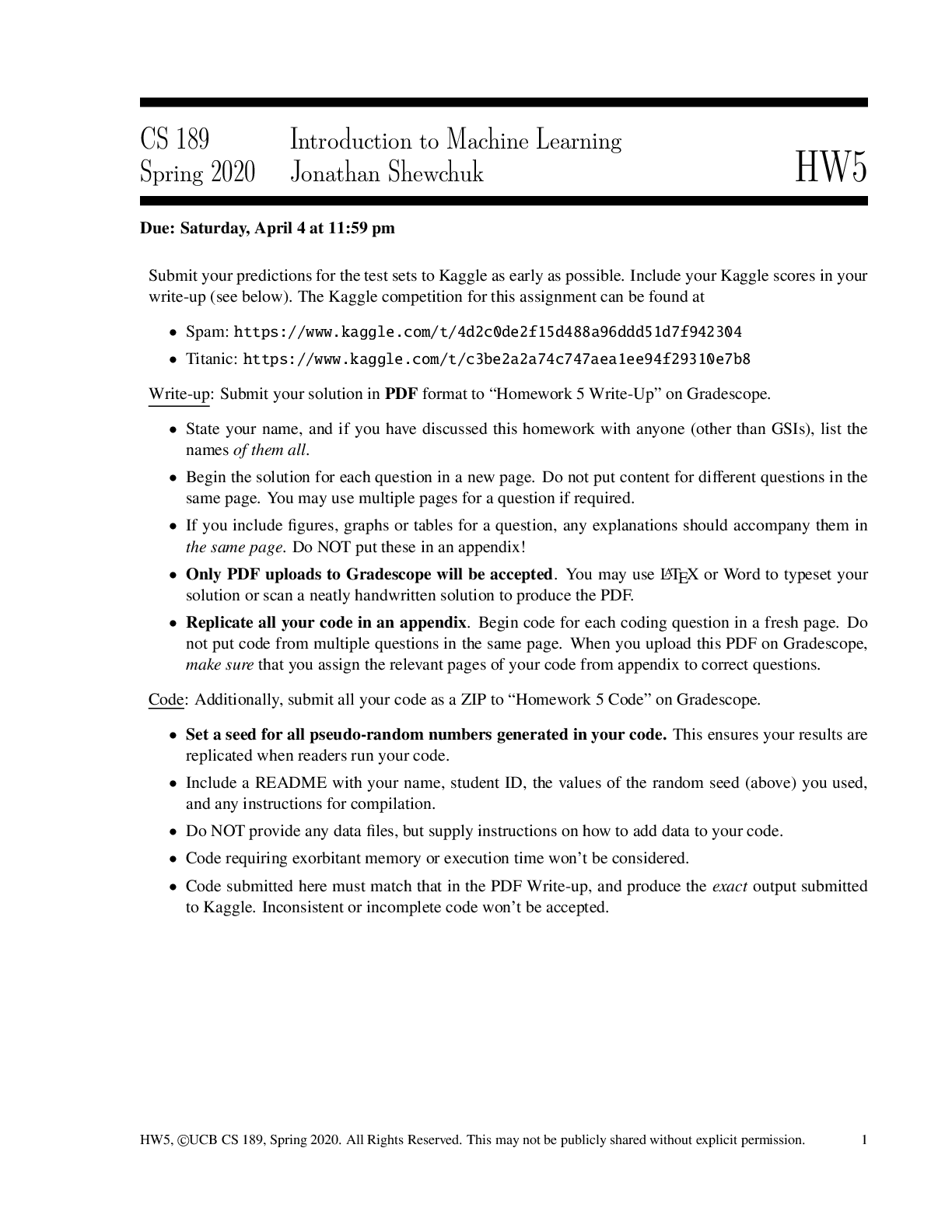

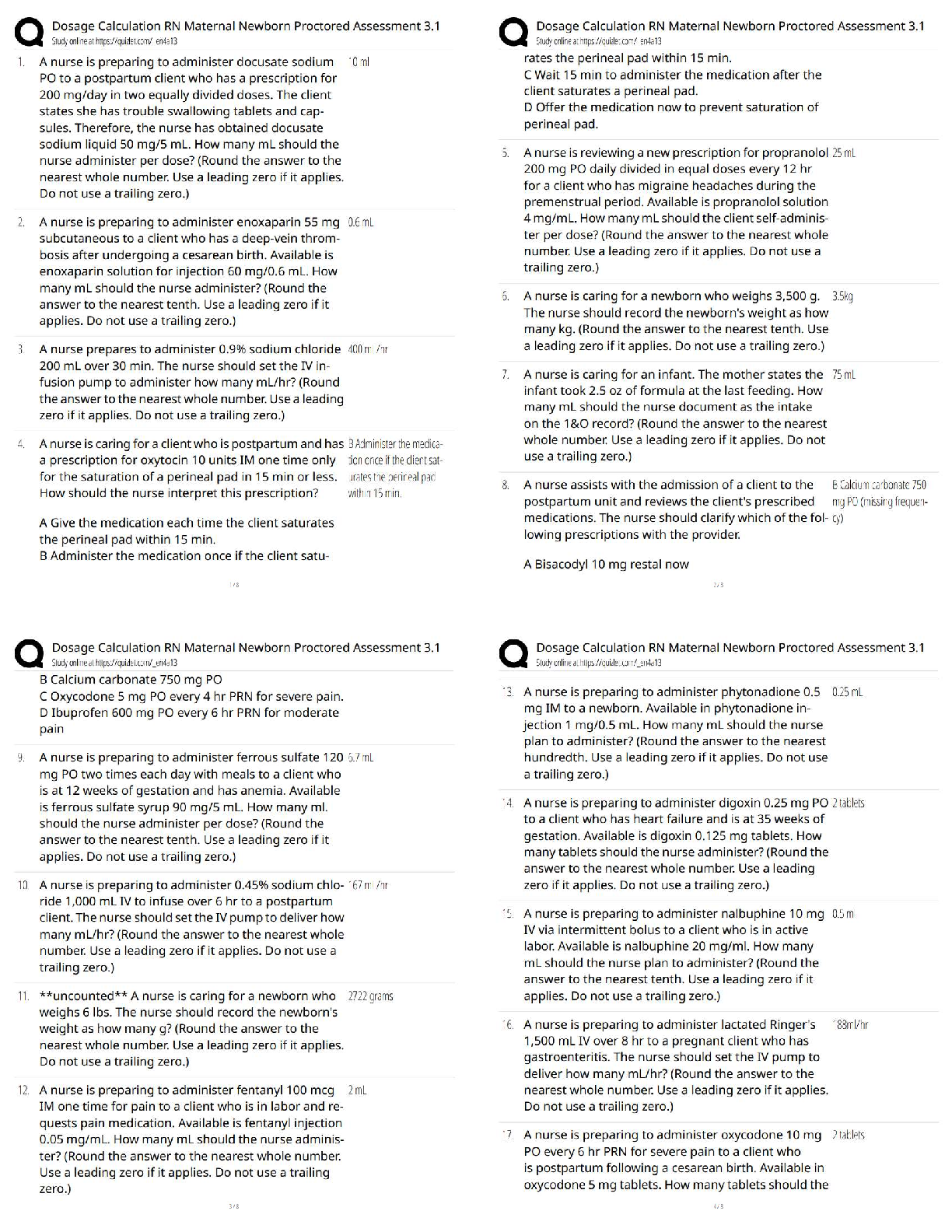

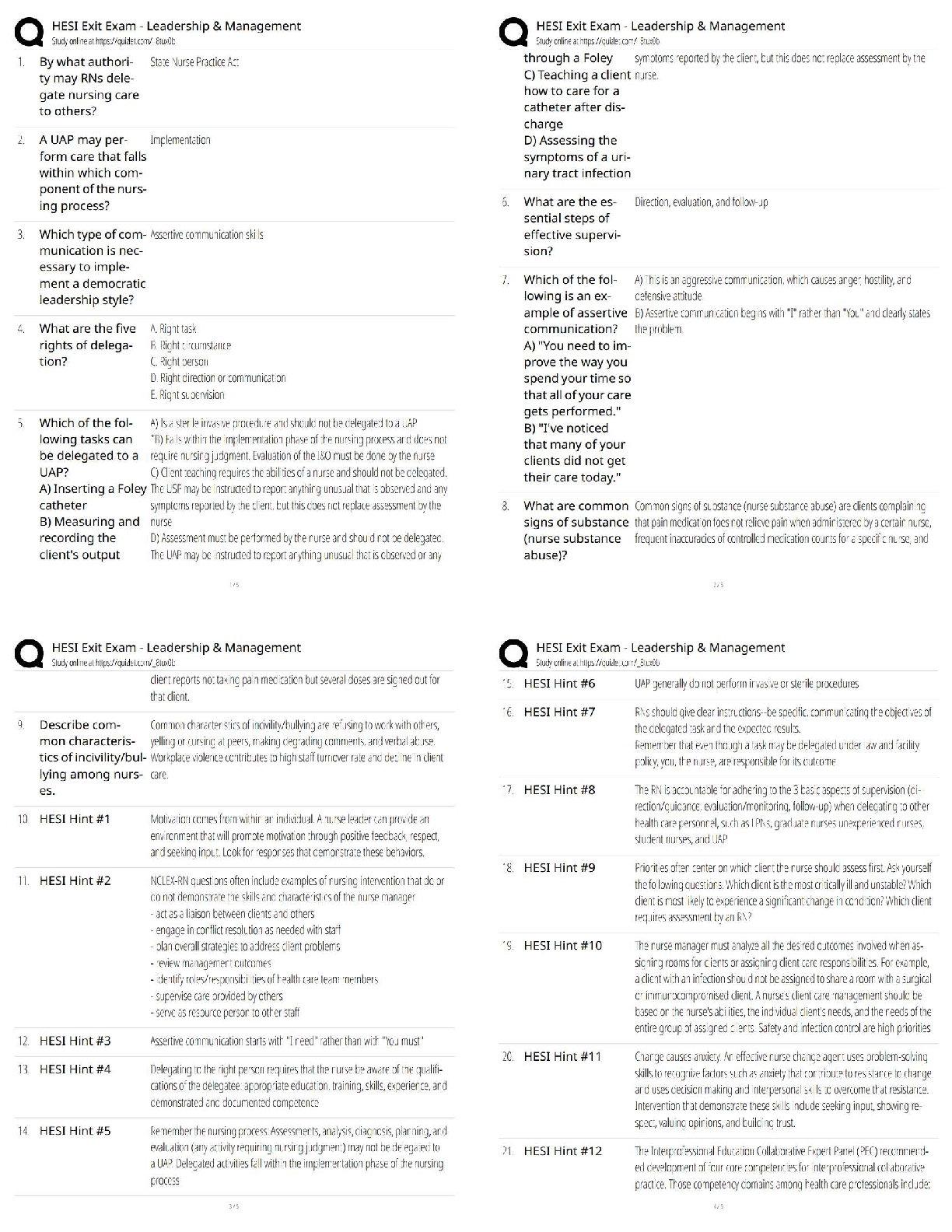

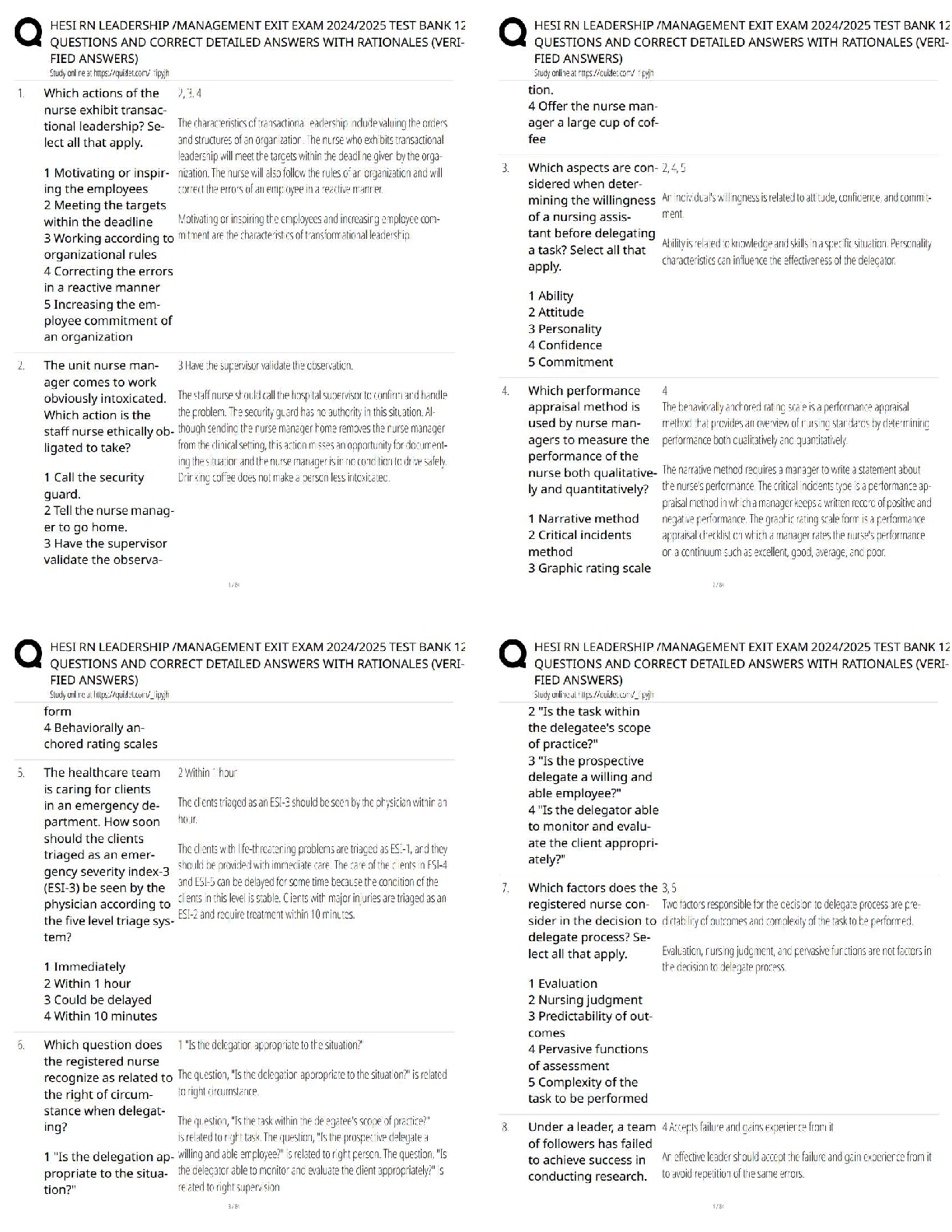

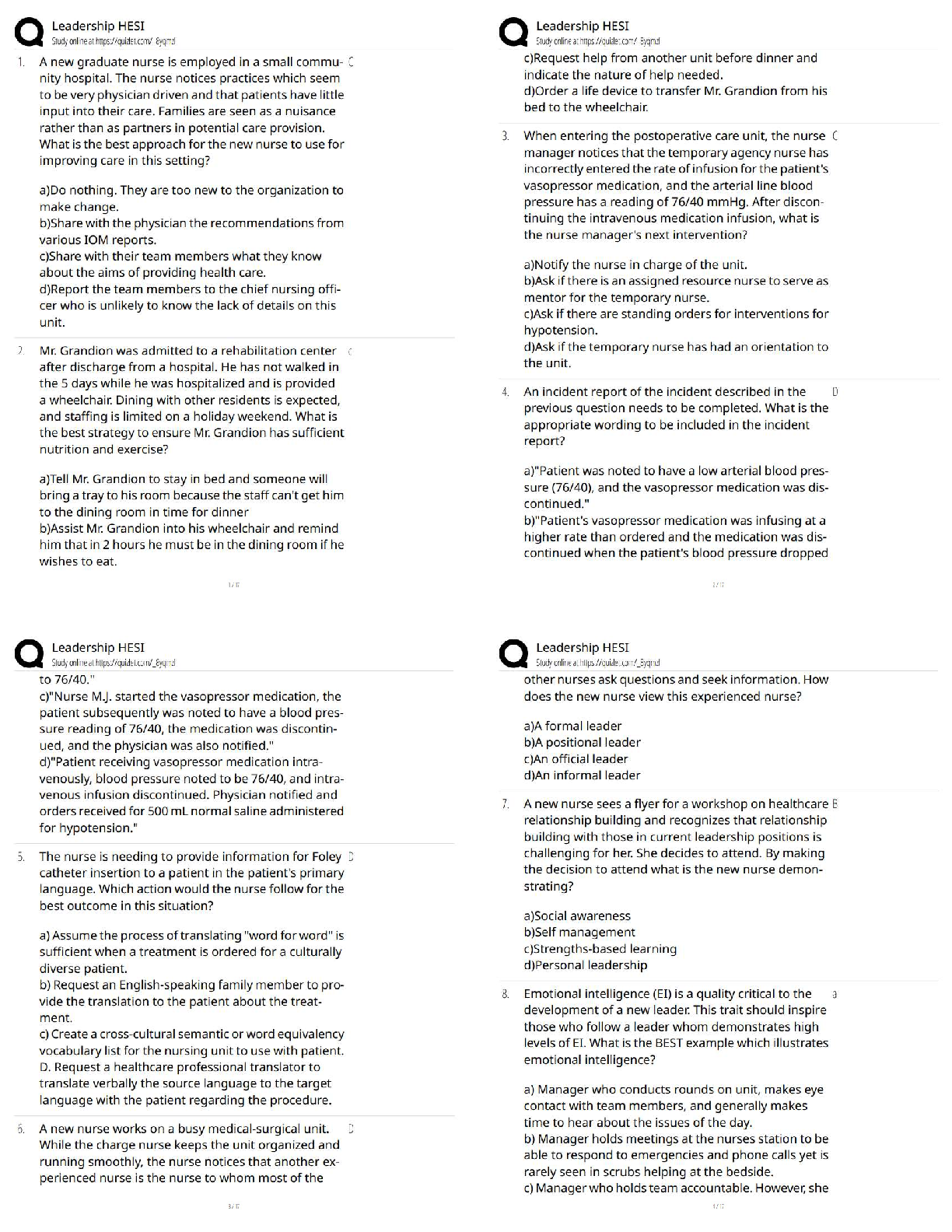

CS 189 Introduction to Machine Learning, Spring 2020, HW5

Due: Saturday, April 4 at 11:59 pm

Submit your predictions for the test sets to Kaggle as early as possible. Include your Kaggle scores in your write-up (see below). The Kaggle competitions for this assignment can be found at:

- Spam: https://www.kaggle.com/t/4d2c0de2f15d488a96ddd51d7f942304
- Titanic: https://www.kaggle.com/t/c3be2a2a74c747aea1ee94f29310e7b8

Write-up: Submit your solution in PDF format to “Homework 5 Write-Up” on Gradescope.

- State your name and, if you have discussed this homework with anyone (other than GSIs), list all of their names.
- Begin the solution for each question on a new page. Do not put content for different questions on the same page. You may use multiple pages for a question if required.
- If you include figures, graphs, or tables for a question, any explanations should accompany them on the same page. Do NOT put these in an appendix!
- Only PDF uploads to Gradescope will be accepted. You may use LaTeX or Word to typeset your solution or scan a neatly handwritten solution to produce the PDF.
- Replicate all your code in an appendix. Begin code for each coding question on a fresh page. Do not put code from multiple questions on the same page. When you upload this PDF to Gradescope, make sure that you assign the relevant pages of your code from the appendix to the correct questions.

Code: Additionally, submit all your code as a ZIP to “Homework 5 Code” on Gradescope.

- Set a seed for all pseudo-random numbers generated in your code. This ensures your results can be replicated when readers run your code.
- Include a README with your name, student ID, the values of the random seed (above) you used, and any instructions for compilation.
- Do NOT provide any data files, but supply instructions on how to add data to your code.
- Code requiring exorbitant memory or execution time won’t be considered.
- Code submitted here must match that in the PDF write-up and produce the exact output submitted to Kaggle. Inconsistent or incomplete code won’t be accepted.

HW5, ©UCB CS 189, Spring 2020. All Rights Reserved. This may not be publicly shared without explicit permission.

1 Honor Code

Declare and sign the following statement:

“I certify that all solutions in this document are entirely my own and that I have not looked at anyone else’s solution. I have given credit to all external sources I consulted.”

While discussions are encouraged, everything in your solution must be your (and only your) creation. Furthermore, all external material (i.e., anything outside lectures and assigned readings, including figures and pictures) should be cited properly. We wish to remind you that the consequences of academic misconduct are particularly severe!

2 Random Forest Motivation

Ensemble learning is a general technique to combat overfitting by combining the predictions of many varied models into a single prediction based on their average or majority vote.

(a) The motivation of averaging. Consider a set of uncorrelated random variables $\{Y_i\}_{i=1}^{n}$ with mean $\mu$ and variance $\sigma^2$. Calculate the expectation and variance of their average. (In the context of ensemble methods, these $Y_i$ are analogous to the prediction made by classifier $i$.)

(b) Ensemble Learning – Bagging. In lecture, we covered bagging (Bootstrap Aggregating). Bagging is a randomized method for creating many different learners from the same data set. Given a training set of size $n$, generate $B$ random subsamples of size $n'$ by sampling with replacement. Some points may be chosen multiple times, while some may not be chosen at all. If $n' = n$, around 63% of the points are chosen, and the remaining 37% are called out-of-bag (OOB) samples.

i. Why 63%?

ii. If we use bagging to train our model, how should we choose the hyperparameter $B$? Recall that $B$ is the number of subsamples; typically, a few hundred to several thousand trees are used, depending on the size and nature of the training set.

(c) In part (a), we saw that averaging reduces variance for uncorrelated classifiers. Real-world predictions will of course not be completely uncorrelated, but reducing correlation will generally reduce the final variance. Now consider a set of correlated random variables $\{Z_i\}_{i=1}^{n}$. Suppose $\mathrm{Corr}(Z_i, Z_j) = \rho$ for all $i \neq j$. Calculate the variance of their average.

(d) Is a random forest of stumps (trees with a single feature split, i.e., height 1) a good idea in general? Does the performance of a random forest of stumps depend much on the number of trees? Think about the bias of each individual tree and the bias of the average of all these random stumps.

3 Decision Trees for Classification

In this problem, you will implement decision trees and random forests for classification on two datasets: 1) the spam dataset, and 2) a Titanic dataset to predict Titanic survivors. The data is provided with the assignment.

In lectures, you were given a basic introduction to decision trees and how such trees are trained. You were also introduced to random forests. Feel free to research different decision tree techniques online. You do not have to implement boosting, though it might help with Kaggle.

3.1 Implement Decision Trees

See the Appendix for more information. You are not allowed to use any off-the-shelf decision tree implementation. Some of the datasets are not “cleaned,” i.e., they contain missing values, so you can use external libraries for data preprocessing and tree visualization (in fact, we recommend it). Be aware that some of the later questions might require special functionality that you need to implement (e.g., a max-depth stopping criterion, visualizing the tree, tracing the path of a sample through the tree).
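One possible shape for the core of 3.1 is sketched below in Python. It is a minimal sketch, not the Appendix's starter code (which is not shown here), and it assumes numeric features, integer class labels 0, 1, …, entropy-based information gain as the split criterion, and binary splits of the form `x[j] < t`; the names `entropy`, `best_split`, `grow`, `predict_one`, and `Node` are hypothetical. It includes a max-depth stopping criterion and can record the path a sample takes through the tree, two of the special features mentioned above.

```python
import numpy as np
from dataclasses import dataclass
from typing import List, Optional, Tuple


def entropy(y: np.ndarray) -> float:
    """Shannon entropy (in bits) of an array of integer class labels."""
    if y.size == 0:
        return 0.0
    counts = np.bincount(y)
    p = counts[counts > 0] / y.size
    return float(-(p * np.log2(p)).sum())


@dataclass
class Node:
    feature: Optional[int] = None   # feature index tested at an internal node
    threshold: float = 0.0          # samples with x[feature] < threshold go left
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    label: Optional[int] = None     # predicted class; set only on leaves


def best_split(X: np.ndarray, y: np.ndarray) -> Optional[Tuple[int, float, float]]:
    """Return (feature, threshold, information gain) of the best split, or None."""
    parent = entropy(y)
    best = None
    for j in range(X.shape[1]):
        values = np.unique(X[:, j])  # sorted distinct values of feature j
        # Candidate thresholds: midpoints between consecutive distinct values,
        # so both children of any candidate split are non-empty.
        for t in (values[:-1] + values[1:]) / 2:
            mask = X[:, j] < t
            yl, yr = y[mask], y[~mask]
            child = (yl.size * entropy(yl) + yr.size * entropy(yr)) / y.size
            gain = parent - child
            if best is None or gain > best[2]:
                best = (j, float(t), gain)
    return best


def grow(X: np.ndarray, y: np.ndarray, depth: int = 0, max_depth: int = 5) -> Node:
    """Recursively grow a tree; stop at max_depth, on a pure node, or on zero gain."""
    majority = int(np.bincount(y).argmax())
    if depth >= max_depth or np.unique(y).size == 1:
        return Node(label=majority)
    split = best_split(X, y)
    if split is None or split[2] <= 0:
        return Node(label=majority)
    j, t, _ = split
    mask = X[:, j] < t
    return Node(feature=j, threshold=t,
                left=grow(X[mask], y[mask], depth + 1, max_depth),
                right=grow(X[~mask], y[~mask], depth + 1, max_depth))


def predict_one(node: Node, x: np.ndarray, trace: Optional[List[str]] = None) -> int:
    """Follow splits from the root to a leaf; optionally record each rule taken."""
    while node.label is None:
        go_left = x[node.feature] < node.threshold
        if trace is not None:
            trace.append(f"x[{node.feature}] {'<' if go_left else '>='} {node.threshold:g}")
        node = node.left if go_left else node.right
    return node.label


# Tiny usage example on a hand-made dataset.
X = np.array([[0.0, 1.0], [1.0, 1.0], [2.0, 0.0], [3.0, 0.0]])
y = np.array([0, 0, 1, 1])
tree = grow(X, y, max_depth=3)
path: List[str] = []
print(predict_one(tree, np.array([2.5, 0.0]), path), path)  # 1 ['x[0] >= 1.5']
```

The split search here is brute force (every midpoint of every feature, roughly O(d·n²) work per node for n samples and d features); sorting each feature once and sweeping thresholds with running class counts is a common way to speed it up.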
You can use any programming language you wish as long as we can read and run your code with minimal effort. In this part of your write-up, include your decision tree code.

3.2 Implement Random Forests

You are not allowed to use any off-the-shelf random forest implementation. If you architected your code well, this part should be a (relatively) easy encapsulation of the previous part. In this part of your write-up, include your random forest code.

3.3 Describe implementation details

We aren’t looking for an essay; 1–2 sentences per question is enough.

1. How did you deal with categorical features and missing values?
2. What was your stopping criterion?
3. How did you implement random forests?
4. Did you do anything special to speed up training?
5. Anything else cool you implemented?

3.4 Performance Evaluation

For each of the 2 datasets, train both a decision tree and a random forest and report your training and validation accuracies. You should be reporting 8 numbers (2 datasets × 2 classifiers × training/validation). In addition, for both datasets, train your best model and submit your predictions to Kaggle. Include your Kaggle display name and your public scores on each dataset. You should be reporting 2 Kaggle scores.

3.5 Writeup Requirements for the Spam Dataset

1. (Optional) If you use any other features or feature transformations, explain what you did in your report. You may choose to use something like bag-of-words. You can implement any custom feature extraction code in featurize.py, which will save your features to a .mat file.
2. For your decision tree, and for a data point of your choosing from each class (spam and ham), state the splits (i.e., which feature and which value of that feature to split on) your decision tree made to classify it. An example of what this might look like:

   Spam example:
   - (a) (“viagra”) ≥ 2
   - (b) (“thanks”) < 1
   - (c) (“nigeria”) ≥ 3
   - (d) Therefore this email was spam.

   Ham example:
   - (a) (“budget”) ≥ 2
   - (b) (“spreadsheet”) ≥ 1
   - (c) Therefore this email was ham.
3. For random forests, find and state the most common splits made at the root node of the trees. For example:
   - (a) (“viagra”) ≥ 3 (20 trees)
   - (b) (“thanks”) < 4 (15 trees)
   - (c) (“nigeria”) ≥ 1 (5 trees)
4. Generate a random 80/20 training/validation split. Train decision trees with varying maximum depths (try going from depth = 1 to depth = 40) with all other hyperparameters fixed. Plot your validation accuracies as a function of the depth. Which depth had the highest validation accuracy? Write 1–2 sentences explaining the behavior you observe in your plot. If you find that you need to plot more depths, feel free to do so.

3.6 Writeup Requirements for the Titanic Dataset

Train a very shallow decision tree (for example, a depth-3 tree, although you may choose any depth that looks good) and visualize your tree. Include for each non-leaf node the feature name and the split rule, and include for leaf nodes the class your decision tree would assign. You can use any visualization method you want, from simple printing to an external library; the rcviz library on GitHub works well.
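Since simple printing is allowed for 3.6, a recursive text renderer may be enough. The sketch below assumes a hypothetical `Node` dataclass that stores a feature name and threshold on internal nodes and a class label on leaves; `render` is likewise a hypothetical helper that emits one line per node, so every internal node shows its feature name and split rule and every leaf shows its assigned class. The example tree is hand-built with placeholder feature names purely to show the output format; it is not a trained Titanic model.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    feature: Optional[str] = None   # feature name tested at an internal node
    threshold: float = 0.0          # left branch: feature < threshold
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    label: Optional[str] = None     # class assigned at a leaf


def render(node: Node, indent: str = "") -> List[str]:
    """Return one text line per node; children are indented under their parent."""
    if node.label is not None:
        return [f"{indent}-> predict {node.label}"]
    lines = [f"{indent}if {node.feature} < {node.threshold:g}:"]
    lines += render(node.left, indent + "    ")
    lines.append(f"{indent}else:  # {node.feature} >= {node.threshold:g}")
    lines += render(node.right, indent + "    ")
    return lines


# Hand-built toy tree with placeholder feature names (not a trained model).
tree = Node("feature_1", 0.5,
            left=Node(label="1"),
            right=Node("feature_2", 10.0, left=Node(label="1"), right=Node(label="0")))
print("\n".join(render(tree)))
```

For a depth-3 tree this prints at most 15 lines; for deeper trees a graphical tool such as the one mentioned above is likely easier to read.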
