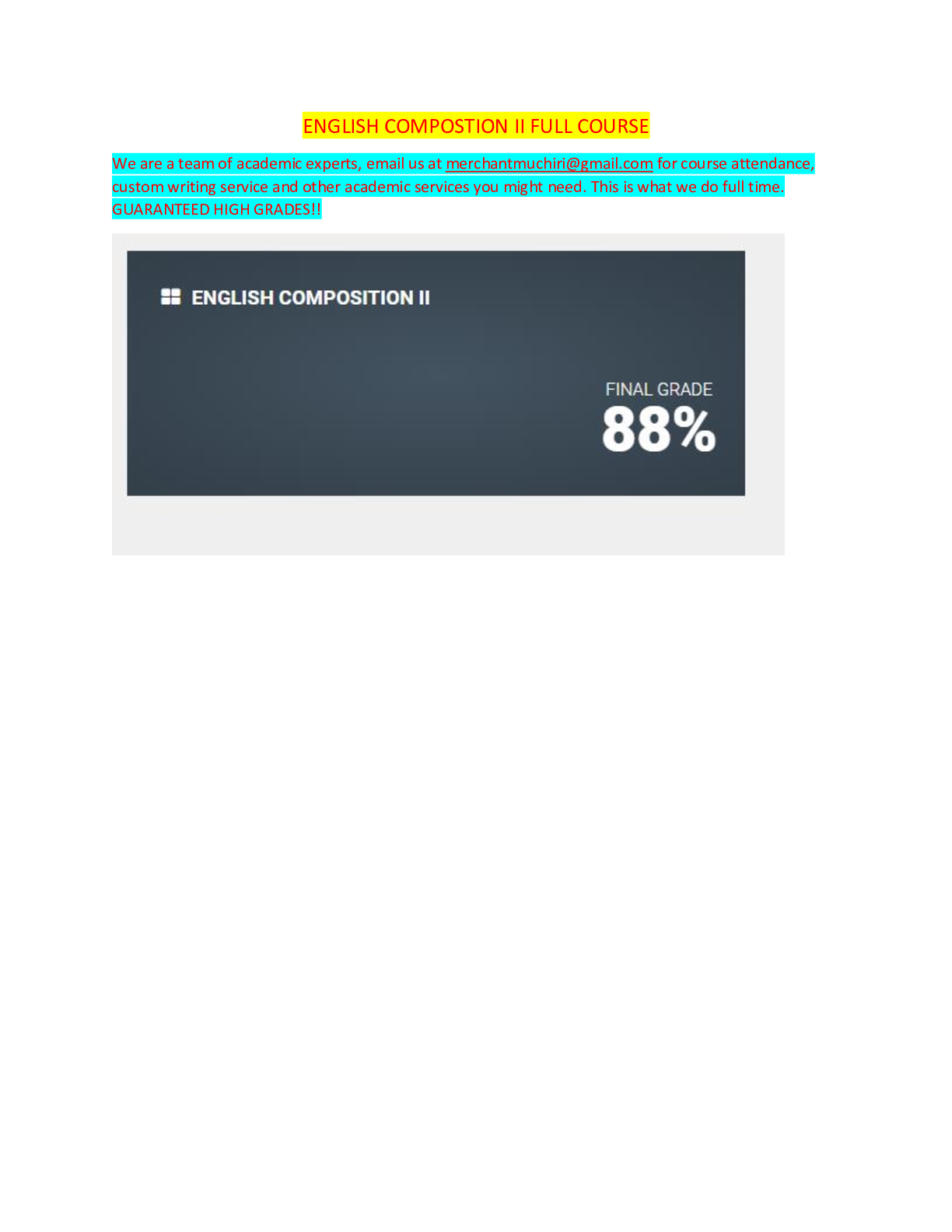

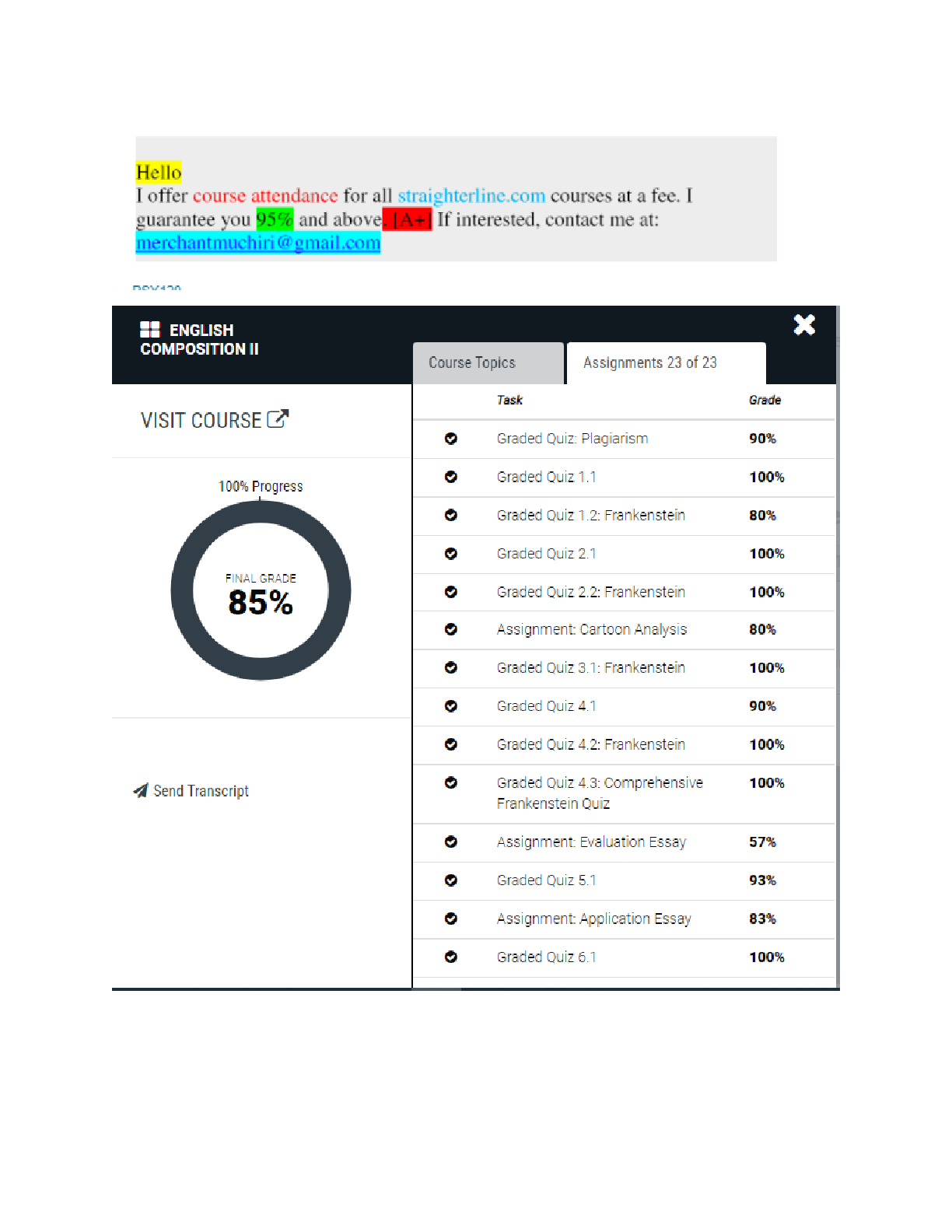

Computer Science > EXAM > CS 221 exam-2015Stanford University ( ALL SOLUTIONS ARE 100% CORRECT ) (All)

CS 221 exam-2015Stanford University ( ALL SOLUTIONS ARE 100% CORRECT )

Document Content and Description Below

CS221 Exam Solutions CS221 November 17, 2015 Name: | {z } by writing my name I agree to abide by the honor code SUNet ID: Read all of the following information before starting the exam: • Thi ... s test has 3 problems and is worth 150 points total. It is your responsibility to make sure that you have all of the pages. • Keep your answers precise and concise. Show all work, clearly and in order, or else points will be deducted, even if your final answer is correct. • Don’t spend too much time on one problem. Read through all the problems carefully and do the easy ones first. Try to understand the problems intuitively; it really helps to draw a picture. • Good luck! Problem Part Max Score Score 1 a 10 b 10 c 10 d 10 e 10 2 a 10 b 10 c 10 d 10 e 10 3 a 10 b 10 c 10 d 10 e 10 Total Score: + + = 11. Two views (50 points) Alice and Bob are trying to predict movie ratings. They cast the problem as a standard regression problem,1 where x 2 X contains information about the movie and y 2 R is the real-valued movie rating. To split up the e↵ort, Alice will create a feature extractor A : X ! Rd using information from the text of the movie reviews and Bob will create a feature extractor B : X ! Rd from the movie metadata (genre, cast, number of reviews, etc.) These features will be used to do linear regression as follows: If u 2 Rd is a weight vector associated with A and v 2 Rd is a weight vector associated with B, then we can define the resulting predictor as: fu ,v(x) def = u · A(x) + v · B(x). (1) We are interested in the squared loss: Loss(x, y, u, v) def = (fu,v(x) # y)2. (2) Let Dtrain be the training set of (x, y) pairs, on which we can define the training loss: TrainLoss(u, v) def = X (x,y)2Dtrain Loss(x, y, u, v). (3) 1Remember that you should be going beyond this for your project! 2a. (10 points) Compute the gradient of the training loss with respect to Alice’s weight vector u. ruTrainLoss(u, v) = Solution ruTrainLoss(u, v) = 2 X (x,y)2Dtrain (fu,v # y)A(x) (4) = 2 X (x,y)2Dtrain (u · A(x) + v · B(x) # y)A(x). (5) Suppose Alice and Bob have found a current pair of weight vectors (u, v) where the gradient with respect to u is zero: ruTrainLoss(u, v) = 0. Mark each of the following statements as true or false (no justification is required). 1. Even if Bob updates his weight vector v to v0, u is still optimal for the new v0; that is, ruTrainLoss(u, v0) = 0 for any v0. # True / False 2. The gradient of the loss on each example is also zero: ruLoss(x, y, u, v) = 0 for each x, y 2 Dtrain. # True / False Solution Neither statement is true: 1. Alice’s weight vector u is only optimal with respect to the particular v. As another example, think of alternating minimization (k-means), where the centroids are only optimal given the current assignments, not for all assignments; otherwise, there would be no point in iterating. 2. The loss gradient is only zero on average, definitely not for each example. 3b. (10 points) Alice and Bob got into an argument and now aren’t on speaking terms. Each of them therefore trains his/her weight vector separately: Alice computes uA = arg min u2Rd X (x,y)2Dtrain (u · A(x) # y)2 and Bob computes vB = arg min v2Rd X (x,y)2Dtrain (v · B(x) # y)2. Just for reference, if they had worked together, then they would have gotten: (uC, vC) = arg min u,v TrainLoss(u, v). Let LA, LB, LC be the training losses of the following predictors: • (LA) Alice works alone: x 7! uA · A(x) • (LB) Bob works alone: x 7! vB · B(x) • (LC) They work together from the beginning: x 7! uC · A(x) + vC · B(x) For each statement, mark it as necessarily true, necessarily false, or neither: 1. LA LB True / False / Neither 2. LC LA True / False / Neither 3. LC LB True / False / Neither Solution We can view LA as the resulting training loss from optimizing over all weight vectors (u, 0), whereas LC optimizes over all (u, v); therefore LC LA. The same logic applies to conclude LC LB. However, we cannot make a comparison between LA and LB; it’s possible that either Alice or Bob’s features get lower training error. Now, let L0A, L0B, L0C be the corresponding losses on the test set. For each statement, mark it as necessarily true, necessarily false, or neither: [Show More]

Last updated: 3 years ago

Preview 1 out of 24 pages

Buy this document to get the full access instantly

Instant Download Access after purchase

Buy NowInstant download

We Accept:

Also available in bundle (1)

Click Below to Access Bundle(s)

Stanford University CS 221 exams (2014, 2015, 2016, 2017, 2018) , aut2018-exam, midterm2015, Midterm Spring 2019

Stanford University CS 221 exams (2014, 2015, 2016, 2017, 2018) , aut2018-exam, midterm2015, Midterm Spring 2019

By Muchiri 4 years ago

$25

8

Reviews( 0 )

$7.00

Can't find what you want? Try our AI powered Search

Document information

Connected school, study & course

About the document

Uploaded On

Apr 15, 2021

Number of pages

24

Written in

All

Additional information

This document has been written for:

Uploaded

Apr 15, 2021

Downloads

0

Views

131